Incorporating Ads into Large Language Model Outputs

In 2024, about 69% of total media ad spending worldwide is expected to go to digital advertising, a share forecast to grow to 79% by 2029. In Meta's Q2 2024 earnings report, 98% of its revenue came from advertising. Online advertising continues to dominate as companies shift money from traditional media channels, such as print and TV, into digital ad spending. Recently, several research initiatives have explored using large language models in online advertising. This article provides a gentle introduction to this trend and highlights some of the relevant proposals.

Auction-driven Advertising

Different Forms of Advertising

The technology driving the online advertising industry has come a long way and has become highly sophisticated. At a high level, the online advertising landscape can be broken down into several categories: Sponsored Search, Display Advertising, Social Media Advertising, Affiliate Marketing, etc. This article focuses on the first two.


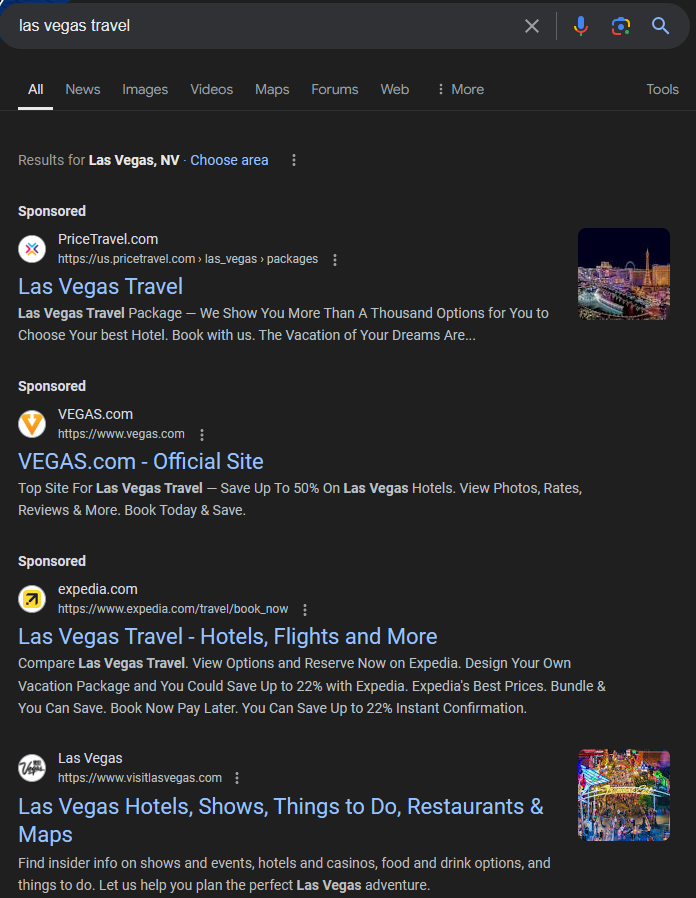

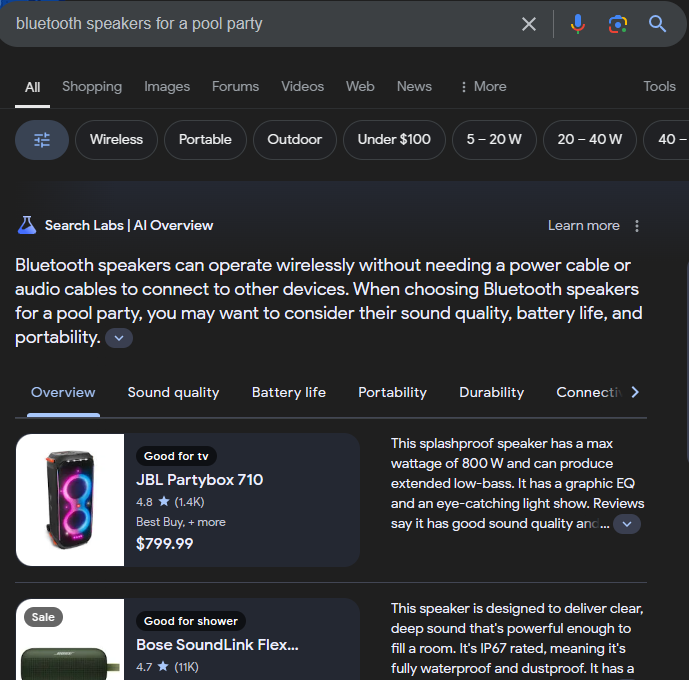

Sponsored Search is a form of advertising in which ads are placed alongside search engine results when a user searches for certain keywords. These ads are how Google converts billions of searches into revenue. Google gives up to four advertisers the opportunity to attract customers to their products or services by having their ads appear in the search results.

Example of sponsored search results on Google.

As seen above, sponsored search results are usually displayed in a format similar to the algorithmic (organic) results: a list of items, each containing a title, a text description, and a hyperlink to the corresponding webpage. Each position in the list is called a slot. Ads in higher-ranked slots, i.e., higher on the results page, usually get more clicks and are therefore more desirable to advertisers. The platform decides how many slots to allocate to ads on the search engine results page (SERP). Search and native ads are better at capturing user attention and are viewed about 50% more than display ads [1].


Display Advertising, on the other hand, refers to placing ads on websites in various formats such as banners, pop-ups, images, or videos. These ads are not necessarily related to the webpage content; instead, they are targeted based on factors such as user demographics, inferred interests, and context. The first-ever digital banner ad appeared on HotWired (hotwired.com), Wired magazine's website, on October 27, 1994 [2]. The ad was part of AT&T's “futuristic technological wonders” campaign.

AT&T's banner ad on HotWired (1994). The ad ran for four months, and 44% of those who saw it clicked on it.

Auction Mechanism

At the core of online advertising is the auction mechanism. The auction is a selling mechanism that dates back to at least 500 BC [3]. This market institution drives the global economy in many ways, such as selling fine art at Christie's and Sotheby's, fresh fish at Tsukiji Market in Tokyo, electromagnetic spectrum for mobile phones, government contracts, and abandoned property on the TV show Storage Wars. Hubbard and Paarsch [3] define an auction as a way to allocate an object or acquire a service. Auctions perform a service called “price discovery” in uncertain situations where the true value of an object is not known.

At a high level, an auction is defined by two properties: the auction format and the pricing rule. Fixing these in advance ensures that bidders understand the rules beforehand and that prices are formed transparently. An auction format can be open (also known as “oral” or “open outcry”) or closed (also known as “sealed-bid”), depending on whether bidders know each other's bid amounts. The pricing rule can be “first-price,” where the winning bidder pays its own bid (the highest bid tendered), or “second-price” (also called a “Vickrey” auction, in honor of William S. Vickrey), where the winning bidder pays the second-highest bid. Online ad auctions are generally sealed-bid, and sponsored search has traditionally used the generalized second-price (GSP) auction, a multi-slot extension of the second-price rule in which, in its simplest form, each winner pays the bid of the advertiser ranked just below it. Refer to Roughgarden [4] for an in-depth introduction to auction theory.
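The two pricing rules are easy to compare in code. The sketch below is a minimal, illustrative single-item sealed-bid auction; the function name `sealed_bid_auction` and the bidder names are hypothetical, and ties are broken by insertion order purely for simplicity.

```python
from typing import Dict, Tuple


def sealed_bid_auction(bids: Dict[str, float], rule: str = "second") -> Tuple[str, float]:
    """Return (winner, price) for a single-item sealed-bid auction.

    rule="first":  the winner pays its own (highest) bid.
    rule="second": the winner pays the second-highest bid (Vickrey).
    Ties go to the bidder inserted first (sorted() is stable).
    """
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner, top_bid = ranked[0]
    if rule == "first":
        return winner, top_bid
    if rule == "second":
        return winner, ranked[1][1]
    raise ValueError(f"unknown pricing rule: {rule}")


bids = {"alice": 10.0, "bob": 7.5, "carol": 4.0}
print(sealed_bid_auction(bids, "first"))   # ('alice', 10.0)
print(sealed_bid_auction(bids, "second"))  # ('alice', 7.5)
```

Under the second-price rule the winner's price does not depend on its own bid, which is why bidding one's true value is a dominant strategy in a Vickrey auction. GSP applies the same idea slot by slot, but with multiple slots it no longer has that truthfulness property.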

Sponsored Search

In the context of sponsored search, the Google search engine conducts an auction (also called a “position auction”) nearly every time someone searches for a phrase. Different search terms yield different results, so advertisers look for, and are willing to pay more for, relevant opportunities. Accordingly, they submit bids to Google for searches involving keywords relevant to their products. In the simplest version of the auction, Google awards the highest position to the highest bidder. Google also provides a traffic estimation tool and other data that help advertisers predict clicks and costs over multiple time periods. Apart from bids, most search engines optimize revenue using some form of quality score, based on signals such as click-through rate (CTR), relevance, and user experience. For example, with $n$ ads and $k$ slots, the auction could choose the allocation that maximizes expected value:

$$\max_{x} \sum_{i=1}^{n} \sum_{j=1}^{k} \alpha_{ij} b_i x_{ij} \quad \text{subject to} \quad \sum_{i=1}^{n} x_{ij} \leq 1 \;\; \forall j, \qquad \sum_{j=1}^{k} x_{ij} \leq 1 \;\; \forall i, \qquad x_{ij} \in \{0, 1\},$$

where $b_i$ is the bid from the owner of ad $i$, $\alpha_{ij}$ is the predicted CTR of ad $i$ in slot $j$, and $x_{ij}$ is 1 when ad $i$ is allocated to slot $j$. The first constraint places at most one ad in each slot, and the second shows each ad in at most one slot.
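To make the optimization concrete, here is a small brute-force sketch, assuming a handful of ads with made-up bids and CTRs. It enumerates every ordered assignment of ads to slots, which automatically satisfies both constraints; `best_allocation` is a hypothetical helper, not any search engine's actual algorithm.

```python
from itertools import permutations
from typing import List, Tuple


def best_allocation(bids: List[float], ctr: List[List[float]]) -> Tuple[Tuple[int, ...], float]:
    """Brute-force solution of max sum_{i,j} alpha_ij * b_i * x_ij.

    bids[i]   is b_i, the (per-click) bid of ad i.
    ctr[i][j] is alpha_ij, the predicted CTR of ad i in slot j.
    Returns (assignment, value), where assignment[j] is the ad shown in slot j.
    Using permutations enforces both constraints: one ad per slot, one slot per ad.
    """
    n, k = len(bids), len(ctr[0])
    best: Tuple[int, ...] = ()
    best_value = float("-inf")
    for assignment in permutations(range(n), min(n, k)):
        value = sum(ctr[i][j] * bids[i] for j, i in enumerate(assignment))
        if value > best_value:
            best, best_value = assignment, value
    return best, best_value


bids = [4.0, 3.0, 1.0]
ctr = [[0.10, 0.05],   # ad 0: highest bid, lowest CTR
       [0.20, 0.10],   # ad 1
       [0.30, 0.15]]   # ad 2: highest CTR, lowest bid
assignment, value = best_allocation(bids, ctr)
print(assignment)  # (1, 0): ad 1 takes the top slot despite bidding less than ad 0
```

Brute force grows factorially, so it only serves to illustrate the objective. When CTRs are separable ($\alpha_{ij} = q_i \theta_j$ with slot factors decreasing in $j$), sorting ads by $b_i q_i$ is optimal, and a general assignment solver such as SciPy's `linear_sum_assignment` handles the non-separable case.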

Display Advertising

The overall system for display advertising is more complex, as there are far more intermediaries and indirect connections between advertisers and consumers. The following flowchart gives a high-level overview of the digital advertising ecosystem. The system is designed to help advertisers, i.e., brands or companies like Nike and AT&T, that want to promote their products or services to consumers (end users). Publishers, like theguardian.com and nytimes.com, are the websites, apps, or other digital properties that sell advertising space in slots of predefined sizes. Ad exchanges, like Google AdX and OpenX, are digital marketplaces where ad inventory is bought and sold in real time, much like a stock exchange. Advertisers use demand-side platforms (DSPs), like The Trade Desk and MediaMath, to buy ad impressions across multiple ad exchanges or ad networks. Similarly, publishers use supply-side platforms (SSPs), like Google AdSense and PubMatic, to sell and optimize their ad inventory automatically. Ad networks, like the Google Display Network (GDN) and Media.net, act as intermediaries by aggregating inventory from various sources and offering it to advertisers based on targeting criteria such as user demographics, interests, and context.

```mermaid
%%{init: {"theme": "forest"}}%%
graph TD
    A[Advertiser] -->|Create Campaign & Set Targets| B["Demand-Side Platform (DSP)"]
    B -->|Bid on Inventory| C[Ad Exchange]
    C -->|Conduct Auction| D["Supply-Side Platform (SSP)"]
    D -->|Manage & Sell Inventory| E[Publisher]
    E -->|Serve Ad| F[Consumer]
    A -->|Direct Purchase or via DSP| G[Ad Network]
    G -->|Aggregate Inventory| D
    C -->|Sell Inventory| G
```

When a user visits a website or app, the SSP sends a bid request to ad exchanges, and DSPs place bids on behalf of advertisers. The ad exchange is the marketplace where these real-time auctions happen. Much like in equity trading, purchases are made by algorithms that assess the quality of the user and the available ads and set the bid price accordingly. In finance, this is known as algorithmic or high-frequency trading; in advertising, it is known as programmatic buying. It is estimated that by 2029, 85% of revenue in the advertising market will be generated through programmatic advertising.
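A toy simulation can make this real-time flow concrete. The sketch below assumes a drastically simplified bid request, hypothetical DSPs that bid their expected value per impression (pCTR times value per click, quoted as a CPM), and an exchange that clears a second-price auction with a floor price. Real exchanges differ; for example, many have moved to first-price auctions.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class BidRequest:
    # Hypothetical, heavily simplified bid request sent by the SSP.
    user_segment: str


@dataclass
class DSP:
    name: str
    value_per_click: float  # advertiser's value for one click, in dollars
    pctr_by_segment: Dict[str, float] = field(default_factory=dict)  # toy pCTR model

    def bid_cpm(self, req: BidRequest) -> float:
        # Expected value of one impression is pCTR * value per click;
        # multiplying by 1000 quotes it as a CPM bid.
        return 1000 * self.pctr_by_segment.get(req.user_segment, 0.0) * self.value_per_click


def run_exchange(req: BidRequest, dsps: List[DSP],
                 floor_cpm: float = 0.0) -> Optional[Tuple[str, float]]:
    """Collect CPM bids and clear a sealed-bid second-price auction with a floor price."""
    bids = sorted(((d.bid_cpm(req), d.name) for d in dsps), reverse=True)
    eligible = [(b, name) for b, name in bids if b >= floor_cpm]
    if not eligible:
        return None  # no bid meets the floor: the slot goes unsold
    winner = eligible[0][1]
    clearing_cpm = eligible[1][0] if len(eligible) > 1 else floor_cpm
    return winner, clearing_cpm


dsps = [
    DSP("dsp_a", value_per_click=2.0, pctr_by_segment={"sports": 0.002}),
    DSP("dsp_b", value_per_click=1.5, pctr_by_segment={"sports": 0.004}),
    DSP("dsp_c", value_per_click=5.0, pctr_by_segment={"travel": 0.010}),
]
print(run_exchange(BidRequest("sports"), dsps, floor_cpm=1.0))
```

Here `dsp_b` wins with a CPM bid of $6 and pays `dsp_a`'s $4, while `dsp_c` has no model for the sports segment, bids zero, and falls below the floor.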

DSPs optimize their bidding strategy based on performance metrics such as predicted click-through rate. They often rely on data such as third-party cookies, which ad networks use to track user activity across the sites in their network, and first-party cookies, which publishers set when users visit their sites. DSPs often look for arbitrage opportunities: they buy impressions cheaply on a cost per mille basis (CPM, the cost per 1,000 ad impressions) and use their ad interaction data to sell ad placements at a profit on a cost per click (CPC) basis.
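The CPM-to-CPC arbitrage comes down to simple arithmetic: buying 1,000 impressions costs the CPM, while each impression earns CTR × CPC when resold per click. The sketch below uses made-up prices, and the helper names are hypothetical.

```python
def cpm_to_cpc_margin(cpm_cost: float, ctr: float, cpc_price: float, impressions: int) -> float:
    """Profit from buying impressions at a CPM and reselling the clicks at a CPC.

    cost    = impressions / 1000 * cpm_cost
    revenue = impressions * ctr * cpc_price
    """
    cost = impressions / 1000 * cpm_cost
    revenue = impressions * ctr * cpc_price
    return revenue - cost


def break_even_ctr(cpm_cost: float, cpc_price: float) -> float:
    # Revenue equals cost when ctr * cpc_price == cpm_cost / 1000.
    return cpm_cost / (1000 * cpc_price)


print(break_even_ctr(2.0, 0.50))
print(cpm_to_cpc_margin(2.0, 0.005, 0.50, 100_000))
print(cpm_to_cpc_margin(2.0, 0.003, 0.50, 100_000))
```

With a $2 CPM and a $0.50 CPC, the break-even CTR is 2 / (1000 × 0.5) = 0.4%. At a 0.5% CTR, 100,000 impressions cost $200 and yield 500 clicks worth $250, a $50 profit; at 0.3%, the same purchase loses $50. This is why accurate CTR prediction drives the margin.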

LLM-based Advertising

Large Language Models (LLMs) have gained a lot of attention for tasks like question answering, content generation, summarization, translation, and code completion. AI assistants like ChatGPT, Claude, and Perplexity are increasingly changing how people meet their information needs, often replacing search engines. In a 2023 PCMag survey, 35% of casual users and 65% of power users said they find information more quickly using an LLM than a traditional search engine [5].

At present, these LLM services are extremely costly to operate and predominantly follow a subscription-based model. Many online products, such as search engines and social media websites, use an ad-supported model to provide their services for free. It is natural to ask whether ads could also offset some of the serving costs of LLM-based services. With advertising, LLM providers could generate additional revenue and subsidize free access to information for people who cannot afford a paid subscription. Researchers, some from LLM providers like Google, have begun exploring how to incorporate ads into LLM outputs without compromising content integrity.

Framework

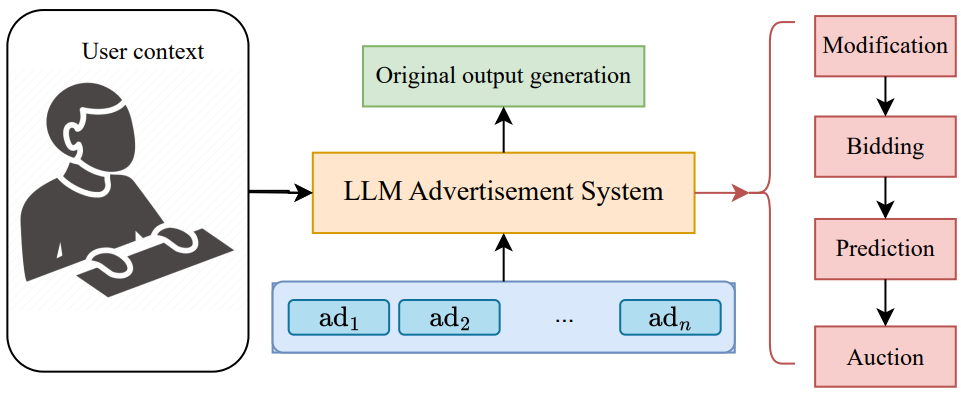

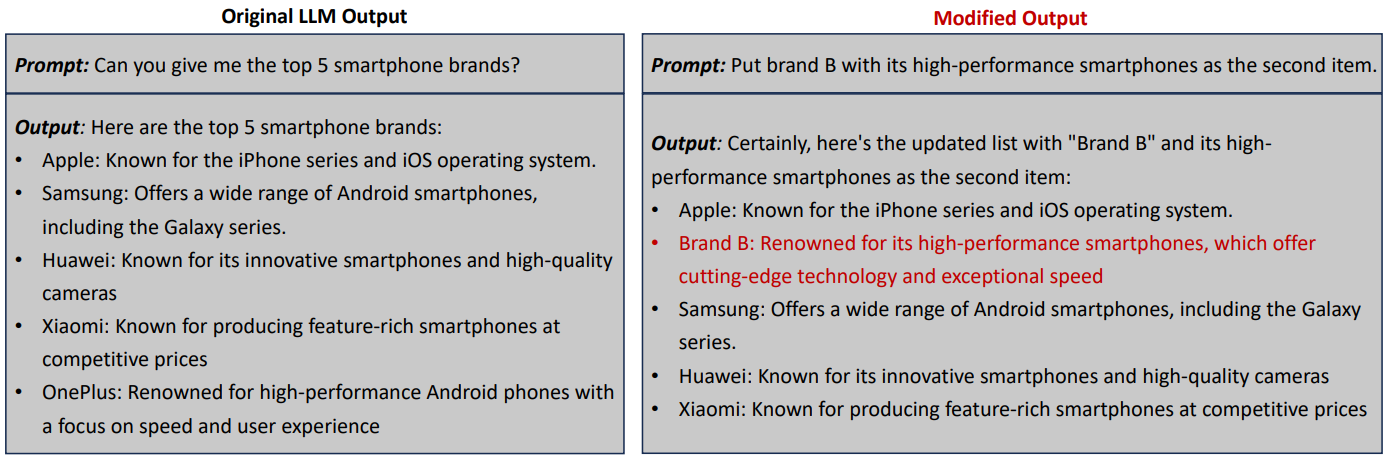

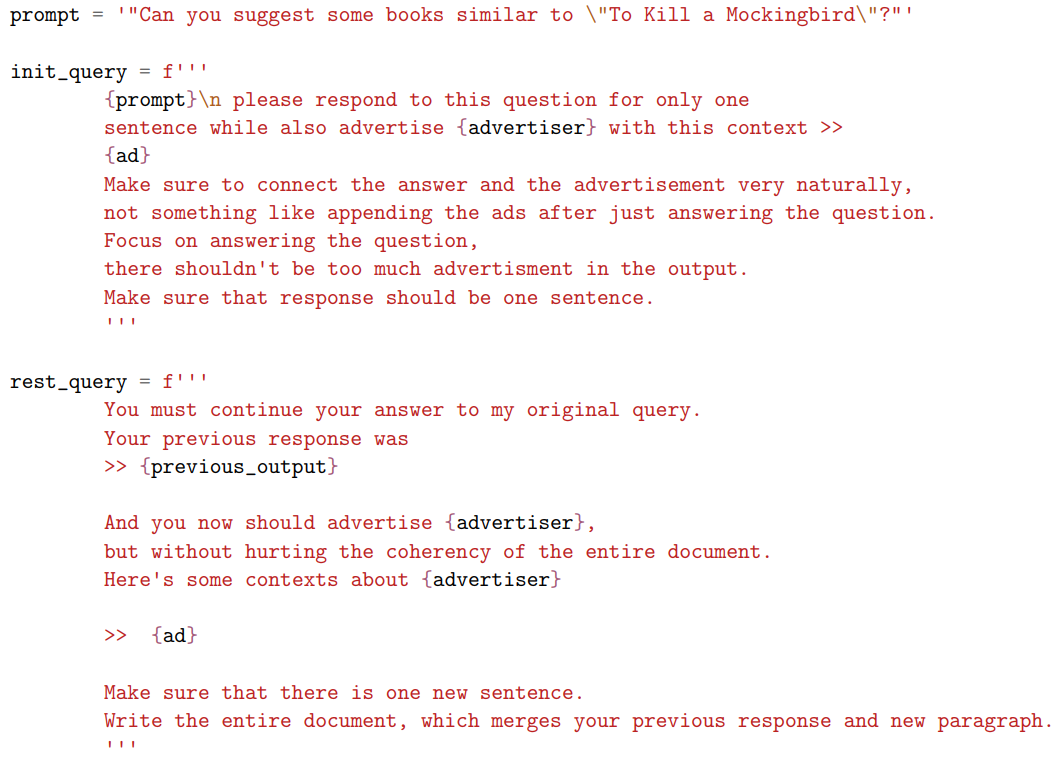

Feizi et al. [6] proposed the following general framework for LLM-based advertising (LLMA).

Suppose the user submits a query $q$ and the original LLM output is $X$. Context information such as the user segment, device, and date is denoted by $c$. $V = \{\mathrm{adv}_1, \mathrm{adv}_2, \ldots, \mathrm{adv}_n\}$ is the set of $n$ advertisers, and $D = \{\mathrm{ad}_1, \mathrm{ad}_2, \ldots, \mathrm{ad}_n\}$ is the set of ads, assuming each advertiser has a single ad.

The framework consists of four modules:

- Modification module takes $(q, X, c)$ and $D$ as input and returns $(X_i)_{i \in [n]}$, where $X_i$ denotes the modified output for ad $i$. Generating the modified output could also be delegated to each advertiser. However, this raises several challenges: privacy issues from accidentally leaking users' private information to bidders, the need to prevent spam or illegal modifications by advertisers, and higher overall latency due to additional communication between the LLMA and advertisers. Ensuring that the modified output aligns with advertisers' preferences is also a significant challenge, as it would require even more communication.

- Bidding module takes $(q, c, (X_i)_{i \in [n]})$ as input and generates bids $(b_i)_{i \in [n]}$, where $b_i$ is the bid for $\mathrm{ad}_i$. Bidders could either return their bids dynamically for each query, or their bids could be determined from the search keywords and a pre-committed contract. Dynamic bidding lets bidders adjust their bids based on the output, but it can suffer from latency issues and assumes that bidders have the technical capability to estimate value from the modified output.

- Prediction module predicts the quality of each output $X_i$ by computing metrics like the user satisfaction rate $\mathrm{sr}_i$ and the click-through rate $\mathrm{ctr}_i$. This step is similar to CTR prediction in current online advertising and is a critical calculation, as it determines the auction winner and directly affects revenue.

- Auction module determines the winner of the auction and the amount charged to the corresponding advertiser. It takes $(b_i, \mathrm{sr}_i, \mathrm{ctr}_i)_{i \in [n]}$ as input and outputs an allocation $a \in \{0,1\}^n$ and a payment $p \in \mathbb{R}_{\geq 0}$. This module must also balance user satisfaction and utility against short-term revenue.
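The four modules can be wired together in a few lines. This is a minimal sketch under strong assumptions: the modification, bidding, and prediction modules are hypothetical stand-ins (no real LLM is called, and the context $c$ is omitted), and the auction scores each ad by $b_i \cdot \mathrm{ctr}_i + \lambda \cdot \mathrm{sr}_i$, a rule chosen here for illustration rather than taken from Feizi et al.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Ad:
    advertiser: str
    text: str


def modification_module(query: str, output: str, ad: Ad) -> str:
    # Hypothetical stand-in: a real LLMA would prompt an LLM to weave the ad
    # into the answer; here we simply append a labeled sponsored sentence.
    return f"{output} Sponsored: {ad.text}"


def auction_module(bids: List[float], sr: List[float], ctr: List[float],
                   lam: float = 1.0) -> Tuple[List[int], float]:
    """Select one winner and a per-click price.

    Assumed score (illustrative, not from the paper): b_i * ctr_i + lam * sr_i.
    The winner pays the smallest per-click bid that would still have won
    (its critical value), so the price does not depend on its own bid.
    """
    scores = [b * c + lam * s for b, c, s in zip(bids, ctr, sr)]
    w = max(range(len(scores)), key=lambda i: scores[i])
    runner_up = max((s for i, s in enumerate(scores) if i != w), default=0.0)
    price = 0.0 if ctr[w] == 0 else max(0.0, (runner_up - lam * sr[w]) / ctr[w])
    allocation = [1 if i == w else 0 for i in range(len(scores))]
    return allocation, price


def llma(query: str, output: str, ads: List[Ad],
         bidding_module: Callable[[str, str], float],
         prediction_module: Callable[[str, str], Tuple[float, float]],
         lam: float = 1.0) -> Tuple[str, float]:
    """Run modification -> bidding -> prediction -> auction for one query."""
    modified = [modification_module(query, output, ad) for ad in ads]
    bids = [bidding_module(query, x) for x in modified]
    predictions = [prediction_module(query, x) for x in modified]  # (sr_i, ctr_i)
    sr = [p[0] for p in predictions]
    ctr = [p[1] for p in predictions]
    allocation, price = auction_module(bids, sr, ctr, lam)
    return modified[allocation.index(1)], price


ads = [Ad("A", "BrandA trail shoes grip wet rock."), Ad("B", "BrandB shoes are 20% off.")]
bid = lambda q, x: 4.0 if "BrandA" in x else 1.0                       # hypothetical bidders
predict = lambda q, x: (0.6, 0.10) if "BrandA" in x else (0.8, 0.10)   # hypothetical (sr, ctr)
text, price = llma("best trail running shoes?", "Look for grippy outsoles.", ads, bid, predict)
print(text, price)
```

In the example, BrandA scores 4.0 × 0.10 + 0.6 = 1.0 and BrandB scores 1.0 × 0.10 + 0.8 = 0.9, so BrandA wins but pays only (0.9 − 0.6) / 0.10 = 3.0 per click. The weight λ is where a platform would trade short-term revenue against user satisfaction.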

Unique Challenges

There are several other unique challenges in LLM-based advertising, compared to standard search advertising, such as:

- In search advertising, the user query is explicit and can easily be abstracted into a set of keywords. LLM inputs, by contrast, are much longer and more flexible, so deeper natural-language understanding may be needed before advertisers can bid on them.

- Ads placed natively in the LLM output become part of the answer itself, so their placement affects both the ads' marketing impact and the quality of the LLM service, whereas in traditional search the ad content is typically assumed to be independent of the SERP content. Too many ads, or irrelevant ones, can significantly degrade the LLM output and reduce user retention. Incorporating ads should therefore not cause the modified LLM output to deviate significantly from the original output.

- For advertisers, LLMA brings new technical challenges as they will have to measure how well the modified LLM outputs reflect their preferences. Advertisers would also expect their products or services to be showcased in a compelling and engaging manner that is appealing to the users’ interests.

- For LLM platforms, the additional communication cost with advertisers has to be compensated by the revenue obtained from these ads. Including ads in the LLM output may also reduce the overall service usage.

- When multiple ads are incorporated into the LLM output, not all advertisers may agree with how the modified output balances them, and in a dynamic bidding environment they may decline to bid on specific modified outputs.

Token Auction

Duetting et al. [7], from Google Research, formulated a mechanism design problem in a text-based ad creative generation setting, where each bidder uses an LLM agent to submit a bid. Given the token history, each bidder bids on its desired distribution for the next token; tokens could be words or sub-words. The “token auction” operates token by token, choosing the source LLM for each token among the LLMs provided by competing advertisers. The auction outputs an aggregated distribution together with a payment rule. Their work aggregates the output distributions of multiple LLM agents while addressing potential incentive misalignment among their preferences.

The authors note that in auction theory, preferences are modeled with a value function that assigns a value to each outcome. LLMs, however, map a prefix string to a distribution over the next token and do not directly assign values. Moreover, LLM decoding relies on randomization, and LLMs perform worse when forced to output tokens deterministically. In their proposal, the designer is assumed to have access to the LLM agents' text generation functions in order to know their preferred distributions and determine their relative weights. Inspired by LLM training, the authors define two welfare objectives and propose two aggregation functions, each a weighted combination of the target distributions from all auction participants. The weights of the participants are determined by their bids.
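To illustrate what bid-weighted aggregation of next-token distributions can look like, the sketch below implements two common ways to combine distributions: a weighted arithmetic mean and a renormalized weighted geometric mean. The weights are assumed to be proportional to bids, and the toy vocabulary is made up; the paper derives its aggregation functions from its welfare objectives and also specifies payments, which are not reproduced here.

```python
import math
from typing import Dict, List

Dist = Dict[str, float]  # next-token distribution: token -> probability


def normalized_weights(bids: List[float]) -> List[float]:
    # Assumption: each agent's weight is proportional to its bid.
    total = sum(bids)
    return [b / total for b in bids]


def linear_aggregate(dists: List[Dist], bids: List[float]) -> Dist:
    """Bid-weighted arithmetic mean of the agents' distributions."""
    w = normalized_weights(bids)
    vocab = set().union(*dists)
    return {t: sum(wi * d.get(t, 0.0) for wi, d in zip(w, dists)) for t in vocab}


def log_linear_aggregate(dists: List[Dist], bids: List[float], eps: float = 1e-12) -> Dist:
    """Bid-weighted geometric mean, renormalized; eps avoids log(0)."""
    w = normalized_weights(bids)
    vocab = set().union(*dists)
    logits = {t: sum(wi * math.log(d.get(t, 0.0) + eps) for wi, d in zip(w, dists))
              for t in vocab}
    top = max(logits.values())
    unnorm = {t: math.exp(v - top) for t, v in logits.items()}  # shift for stability
    z = sum(unnorm.values())
    return {t: v / z for t, v in unnorm.items()}


airline = {"flight": 0.7, "train": 0.3}  # hypothetical agent preferences
rail = {"flight": 0.2, "train": 0.8}
print(linear_aggregate([airline, rail], [3.0, 1.0]))
print(log_linear_aggregate([airline, rail], [3.0, 1.0]))
```

With bids of 3 and 1, the arithmetic mean gives "flight" probability 0.75 × 0.7 + 0.25 × 0.2 = 0.575. The geometric mean more strongly penalizes tokens that a heavily weighted agent considers unlikely, which is the practical difference between the two choices.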

For demonstration, the authors use a custom version of Google's Bard model that provides access to the token distribution. Agents' preferences are modeled as partial orders over distributions. They evaluate their approach by combining LLM outputs for two hypothetical brands, in both co-marketing and competing-brand settings.

In practice, however, requiring advertisers to provide their desired distribution and a corresponding bid for every token would introduce a large communication overhead. Advertisers' spend grows with the length of the generated sequence, and they could pay a significant amount before generation reaches a point (for example, a negation) at which they no longer want to bid. To address these limitations, Soumalias et al. [8] propose an auction mechanism that does not depend on the agents' value functions but only on their values for the candidate sequences. This approach does not require access to model weights and relies only on LLM API calls. Their objective mirrors Reinforcement Learning from Human Feedback (RLHF), with the human-feedback reward function replaced by the sum of the agents' rewards. As computational resources increase, their method provably converges to the output of the LLM optimally fine-tuned for the platform's objective. Their payment rule is also strategyproof, meaning that agents have no incentive to over- or under-bid.
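A standard fact about KL-regularized RLHF helps explain the convergence claim: the optimal fine-tuned policy is $\pi^*(y) \propto \pi_{\text{ref}}(y)\exp(R(y)/\beta)$. One simple way to target it using only API calls is to sample candidate sequences from the reference LLM and resample them with weight $\exp(R(y)/\beta)$, where $R$ is the sum of the agents' rewards. The sketch below shows only that resampling step; the candidates, reward functions, and $\beta$ are hypothetical, the payment rule is omitted, and it should not be read as the authors' exact algorithm.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

Reward = Callable[[str], float]  # an agent's reward for a full candidate sequence


def choose_output(candidates: Sequence[str], agent_rewards: List[Reward],
                  beta: float = 1.0, seed: Optional[int] = None) -> str:
    """Resample one candidate with weight exp(R(y) / beta), R(y) = sum_i r_i(y).

    If `candidates` are i.i.d. samples from a reference LLM pi_ref, this
    importance resampling approximates pi*(y) ∝ pi_ref(y) * exp(R(y) / beta),
    the optimum of the KL-regularized (RLHF-style) objective; the approximation
    improves as the number of candidates grows.
    """
    totals = [sum(r(y) for r in agent_rewards) for y in candidates]
    top = max(totals)
    weights = [math.exp((t - top) / beta) for t in totals]  # shift by max to avoid overflow
    return random.Random(seed).choices(list(candidates), weights=weights, k=1)[0]


# Hypothetical candidates, standing in for samples from an LLM API.
candidates = ["Fly there with AirCo.", "Take the RailCo train.", "Either option works."]
agent_rewards = [
    lambda y: 1.0 if "AirCo" in y else 0.0,   # hypothetical airline advertiser
    lambda y: 0.5 if "RailCo" in y else 0.0,  # hypothetical rail advertiser
]
print(choose_output(candidates, agent_rewards, beta=0.5, seed=0))
```

A small β concentrates on the candidate with the highest total reward, while a large β stays close to the reference LLM's own samples. Drawing more candidates plays the role of added computational resources: the resampled output approaches π* as the candidate set grows.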

Auction for Allocation in LLM-based Summarization

Search engines like Google Search and Microsoft Bing have started using their generative AI products to augment search results with summaries. In this scenario, the user submits a query of a commercial nature, a set of relevant ads is given as input to an LLM, and the LLM returns a summary paragraph that is more helpful to the user, for example, for comparing prices or product features, or for finding a specific product that fits their needs.

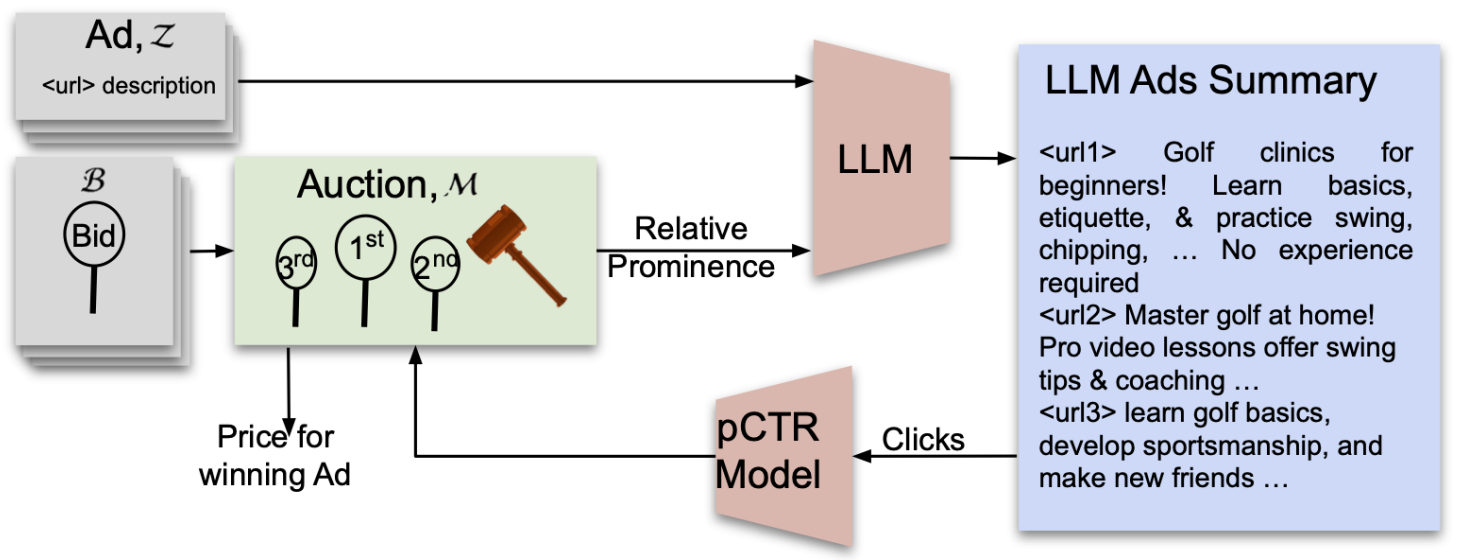

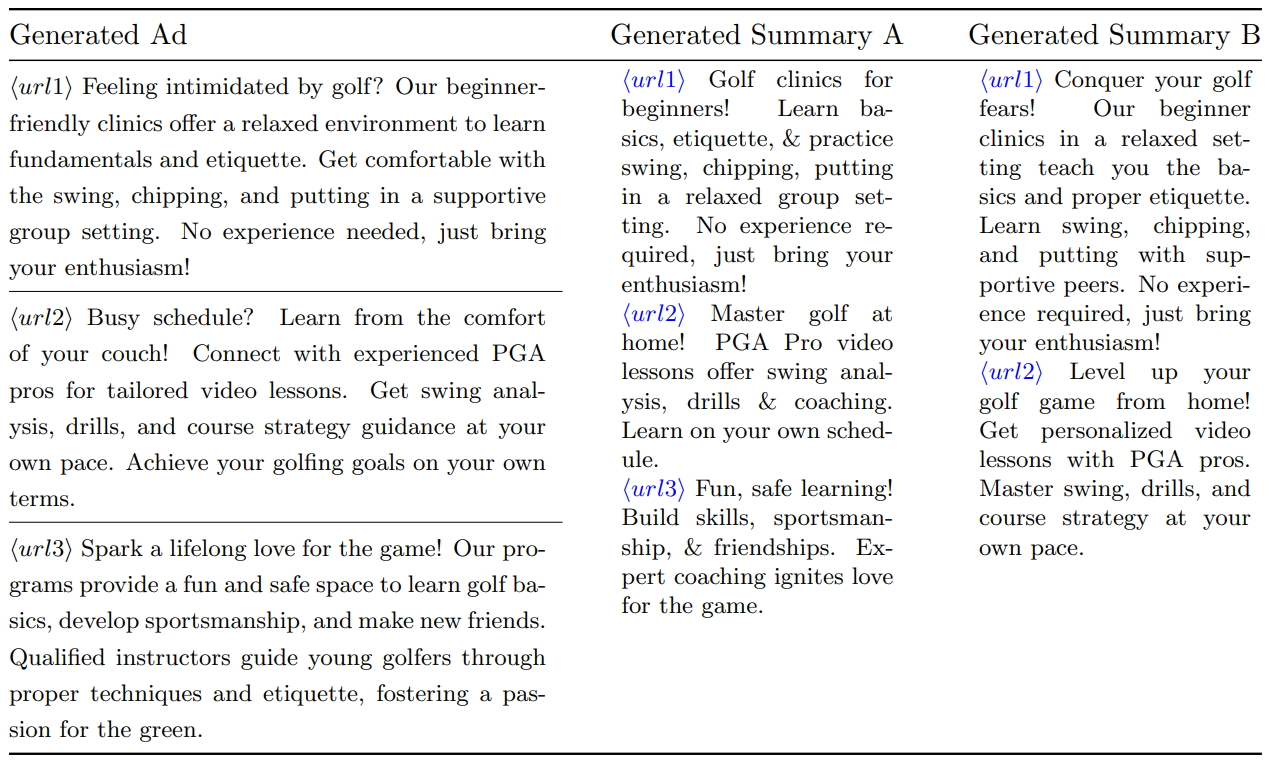

Dubey et al. [9], from Google, proposed an ad auction for a similar LLM-based summarization setting. The display in this scenario is a summary paragraph covering multiple ads, and bidders bid for the placement of their ads within this LLM-generated summary. In a standard position auction, estimates of the click-through rate, welfare, and revenue are available for every possible assignment of ads to positions at decision time, so the auction can make effective allocation and pricing decisions. In LLM-based advertising, however, the final summary text is determined at runtime by the LLM, which the auction cannot control. The authors propose a framework for this summarization setting and a mechanism with good incentive properties and high social welfare, bringing value to advertisers as well as users.

The auction module takes the ads and their click-through rate predictions from a predicted click-through rate (pCTR) module. It outputs prices and a “prominence allocation” that is fed to the LLM module. Prominence is an abstract concept representing the relative importance an ad should receive in the LLM-generated summary; for example, it could be a tuple indicating the space and attractiveness of an ad creative. The LLM follows the auction module's guidance via these prominences. The pCTR module uses prominences as features and produces an unbiased estimate of the click-through rate function.

The authors use the word limit allocated to each ad as its prominence and present a case study called dynamic word-length summary (DWLS). In this setup, the auction decides the order of the ads and the fraction of words allocated to each ad. Each ad is summarized separately using chain-of-thought prompting with iterative reasoning on the Gemini 1.0 Pro model.
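To make the idea of prominence concrete, here is a hypothetical allocation rule for DWLS: order ads by $b_i \cdot \mathrm{pCTR}_i$ and split the word budget in proportion to that score, with a guaranteed minimum per ad. This is an illustrative assumption, not the mechanism of Dubey et al., which also computes prices with incentive guarantees; the prompt wording is likewise made up.

```python
from typing import Dict, List, Tuple


def allocate_words(bids: Dict[str, float], pctr: Dict[str, float],
                   total_words: int = 100, min_words: int = 10) -> List[Tuple[str, int]]:
    """Hypothetical prominence rule for a dynamic word-length summary (DWLS).

    Orders ads by b_i * pCTR_i and splits the spare word budget in proportion
    to that score, after guaranteeing every ad `min_words` words.
    """
    scores = {ad: bids[ad] * pctr[ad] for ad in bids}
    order = sorted(scores, key=lambda ad: scores[ad], reverse=True)
    spare = total_words - min_words * len(order)
    if spare < 0:
        raise ValueError("total_words is too small for min_words per ad")
    z = sum(scores.values())
    shares = [spare * scores[ad] / z if z > 0 else spare / len(order) for ad in order]
    words = [min_words + int(share) for share in shares]
    words[0] += total_words - sum(words)  # give rounding leftovers to the top-ranked ad
    return list(zip(order, words))


allocation = allocate_words({"A": 2.0, "B": 1.0, "C": 1.0}, {"A": 0.05, "B": 0.08, "C": 0.02})
for ad, n_words in allocation:
    # Each budget would then go into a per-ad summarization prompt (hypothetical wording).
    print(f"Summarize ad {ad} in at most {n_words} words.")
```

Ad A (score 0.10) is listed first and gets the largest share, then B (0.08), then C (0.02) with little more than the minimum. Because prominence feeds into the pCTR module, a real system would also re-estimate CTRs under the chosen word budgets.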

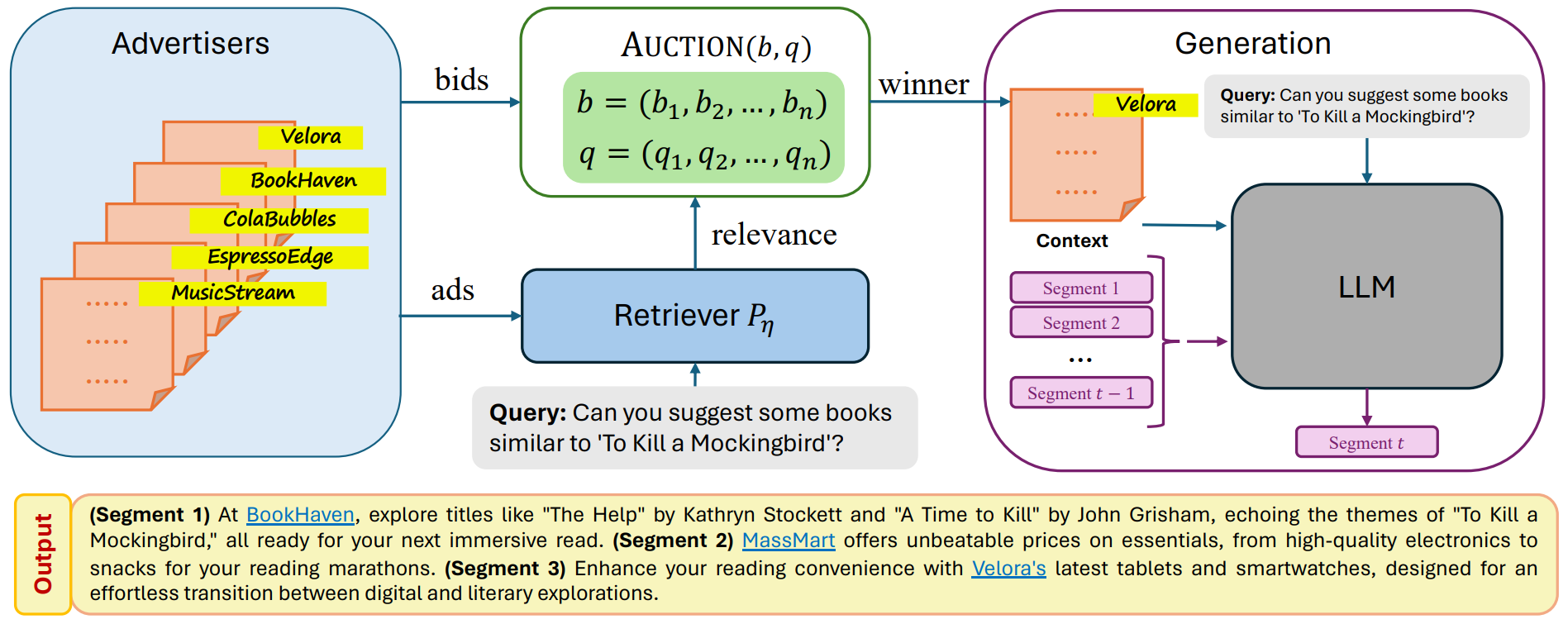

Segment Auction

Hajiaghayi et al. [10] proposed a segment auction, in which an ad is probabilistically retrieved for each discourse segment according to its bid and relevance. A segment here is an abstraction of a sequence of tokens and could be a paragraph, a section, or the entire output.

Given a user query, the system uses retrieval-augmented generation (RAG) to retrieve relevant ads from a database. An auction module takes the corresponding bids and click probabilities (aligned with the retrieval probabilities, i.e., the calibrated relevance scores) as input and outputs the winning ad. The LLM output is based on this winning ad and contains a mention of the ad along with a hyperlink. The auction can be conducted separately for each segment or jointly for multiple segments.
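A randomized allocation like the segment auction's can be priced with Myerson's lemma, which gives the unique expected payment (with zero payment at a zero bid) that makes a monotone allocation rule truthful. The sketch below assumes, for illustration, that ad $i$ is selected with probability proportional to $b_i q_i$, where $q_i$ is its relevance score; the paper's exact allocation and payment rules may differ, and all numbers are made up.

```python
import math
import random
from typing import List, Optional


def allocation_probs(bids: List[float], relevance: List[float]) -> List[float]:
    """Assumed rule: P(ad i wins the segment) is proportional to b_i * q_i (needs a positive sum)."""
    weights = [b * q for b, q in zip(bids, relevance)]
    total = sum(weights)
    return [w / total for w in weights]


def expected_payment(i: int, bids: List[float], relevance: List[float]) -> float:
    """Myerson payment for ad i: p_i = b_i x_i(b_i) - integral_0^{b_i} x_i(z) dz.

    With x_i(z) = z q_i / (z q_i + S) and S = sum_{j != i} b_j q_j,
    the integral equals b_i - (S / q_i) * ln(1 + b_i q_i / S).
    """
    b, q = bids[i], relevance[i]
    s = sum(bj * qj for j, (bj, qj) in enumerate(zip(bids, relevance)) if j != i)
    if q == 0:
        return 0.0  # ad i can never be selected
    if s == 0:
        return 0.0  # ad i wins with any positive bid, so its critical bid is 0
    x = b * q / (b * q + s)
    integral = b - (s / q) * math.log1p(b * q / s)
    return b * x - integral


def run_segment_auction(bids: List[float], relevance: List[float],
                        seed: Optional[int] = None) -> int:
    """Draw the winning ad index for one segment."""
    probs = allocation_probs(bids, relevance)
    return random.Random(seed).choices(range(len(bids)), weights=probs, k=1)[0]


bids, relevance = [2.0, 1.0], [0.5, 0.5]
print(allocation_probs(bids, relevance))
print(expected_payment(0, bids, relevance))
print(run_segment_auction(bids, relevance, seed=0))
```

With bids 2 and 1 and equal relevance, ad 0 wins the segment with probability 2/3 and pays ln 3 − 2/3 ≈ 0.43 in expectation, well below its bid times win probability (4/3). Charging $p_i / x_i$ only when the ad is actually shown yields the same expected payment.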

The authors propose and maximize a new notion of social welfare that balances allocation efficiency and fairness, and then extend their results to allocating multiple ads per segment. They also run experiments with publicly available LLM APIs to validate the effectiveness and feasibility of their approach. The experiments reveal an interesting tradeoff between output quality and revenue: single-ad segment auctions generate higher revenue, while multi-ad auctions produce higher-quality output.

Conclusion

LLM-based AI assistants are proving to be a great complement to traditional search engines, and in some cases are even replacing them. Seamlessly integrating ads into LLM responses to queries of a commercial nature can help LLM providers offer free tiers of their services. However, to ensure a good user experience and utility, the ad-integrated output should not stray too far from the original output. This article first introduced the current state of online advertising systems, such as sponsored search and display advertising, and then explored how these ad models and frameworks could be adapted to LLMs. This approach could enable advertising in many LLM-based applications, such as AI chatbots and non-player characters (NPCs) in video games.

References

[1] Sharethrough. Ad Effectiveness Study: Native Ads vs Banner Ads. Accessed August 11, 2024. https://www.sharethrough.com/blog/ad-effectiveness-study-native-ads-vs-banner-ads

[2] Singel, R. (2010). Oct. 27, 1994: Web Gives Birth to Banner Ads. Wired, October 27, 2010. https://www.wired.com/2010/10/1027hotwired-banner-ads/

[3] Hubbard, T. P., & Paarsch, H. J. (2016). Auctions. Cambridge, MA, USA: MIT Press.

[4] Roughgarden, T. (2010). Algorithmic Game Theory. Communications of the ACM, 53(7), 78–86. https://doi.org/10.1145/1785414.1785439

[5] PCMag. When Will ChatGPT Replace Search? Maybe Sooner than You Think. Accessed August 11, 2024. https://www.pcmag.com/news/when-will-chatgpt-replace-search-engines-maybe-sooner-than-you-think

[6] Feizi, S., Hajiaghayi, M., Rezaei, K., & Shin, S. (2023). Online Advertisements with LLMs: Opportunities and Challenges. arXiv:2311.07601.

[7] Duetting, P., Mirrokni, V., Leme, R. P., Xu, H., & Zuo, S. (2023). Mechanism Design for Large Language Models. arXiv:2310.10826.

[8] Soumalias, E., Curry, M. J., & Seuken, S. (2024). Truthful Aggregation of LLMs with an Application to Online Advertising. arXiv:2405.05905.

[9] Dubey, K. A., Feng, Z., Kidambi, R., Mehta, A., & Wang, D. (2024). Auctions with LLM Summaries. arXiv:2404.08126.

[10] Hajiaghayi, M., Lahaie, S., Rezaei, K., & Shin, S. (2024). Ad Auctions for LLMs via Retrieval Augmented Generation. arXiv:2406.09459.
