A Guide to User Behavior Modeling

With the growing number of online services, recommender systems have become an essential tool for cutting through information overload and personalizing the customer experience in applications such as online shopping and movie recommendations. Recommender systems predict customers’ interests using various data sources and suggest future actions based on the inferred interests. Data sources include information about the users, the items, and the interactions between users and items. User-item interactions are particularly important because they reveal user preferences and interests. This article describes how users’ historical interactions can be used to characterize user behavior and build effective recommender systems.

Conceptual Definitions

Recommender systems (RecSys) customize and adapt to users’ specific needs through explicit or implicit feedback. Explicit feedback about user preferences (e.g., ratings) is usually scarce and is considered a major bottleneck in the RecSys domain. Hence, user interest is often modeled through feedback implicitly recorded in past user behavior, such as behavior history and search patterns. These strategies for learning user interest have also been employed in other information retrieval domains, such as online advertising and web search.

Next, we define the associated terms that will help in understanding and distinguishing the various types of user behavior1.

- Behavior Object is the item or service that the user interacts with. It is usually an item or a set of items. It may also have associated information such as text descriptions, images, and interaction time.

- Behavior Type is the action or the way the user interacts with the items or services. It may include, for example, search, click, add-to-cart, buy, share, etc. These logged behavior types are also called “channels”, through which user feedback is collected.

- Behavior is the combination of a behavior type and a behavior object, i.e. a user interacting with a behavior object through a behavior type.

- Behavior Sequence (or behavior session) is the trajectory consisting of multiple user behaviors.
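To make these four definitions concrete, here is a minimal Python sketch of them as data structures. The class and field names (`BehaviorObject`, `Behavior`, `BehaviorSequence`, `item_ids`, `timestamp`) are hypothetical, chosen only for illustration, and storing the interaction time on the behavior rather than on the object is an assumption.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class BehaviorObject:
    """The item (or set of items) a user interacts with, plus optional metadata."""
    item_ids: Tuple[str, ...]          # one ID for a single item, several for a basket
    description: Optional[str] = None  # e.g. a text description


@dataclass(frozen=True)
class Behavior:
    """A behavior: a behavior type applied to a behavior object at some time."""
    behavior_type: str                 # e.g. "search", "click", "add-to-cart", "buy"
    obj: BehaviorObject
    timestamp: float


@dataclass
class BehaviorSequence:
    """A user's trajectory of behaviors, kept in temporal order."""
    user_id: str
    behaviors: List[Behavior] = field(default_factory=list)

    def append(self, behavior: Behavior) -> None:
        self.behaviors.append(behavior)
        # Python's sort is stable, so behaviors with equal timestamps keep arrival order.
        self.behaviors.sort(key=lambda b: b.timestamp)

    def types(self) -> List[str]:
        return [b.behavior_type for b in self.behaviors]


seq = BehaviorSequence(user_id="u1")
seq.append(Behavior("click", BehaviorObject(("sku42",)), timestamp=2.0))
seq.append(Behavior("search", BehaviorObject(("sku42",)), timestamp=1.0))
print(seq.types())  # ['search', 'click']
```

A basket-style behavior object simply carries several IDs in `item_ids`.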

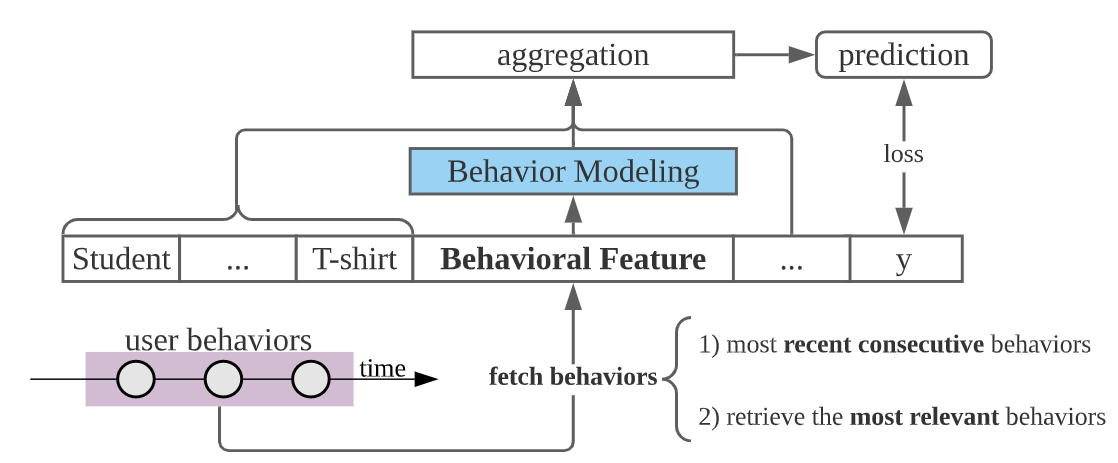

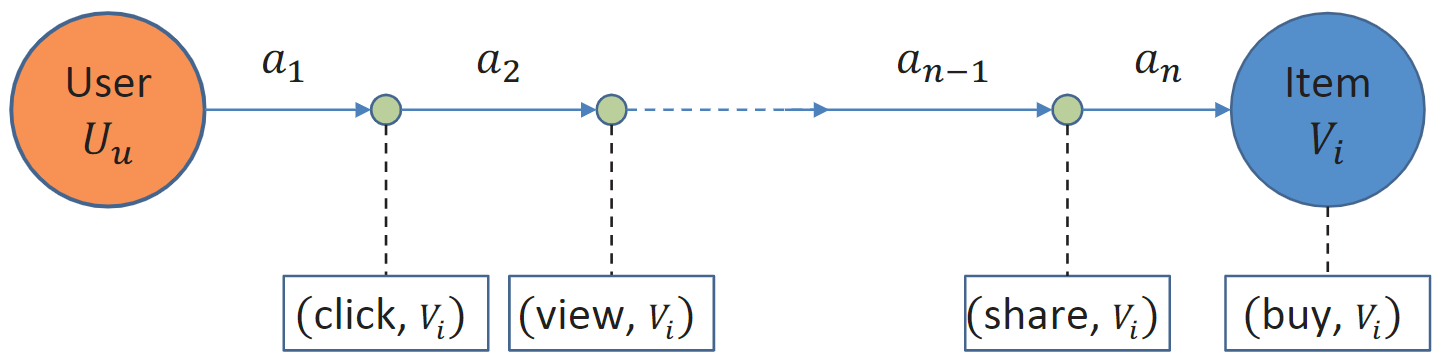

The following figure shows a general framework of user behavior modeling2.

User behaviors contain crucial patterns of user interests. This article categorizes behavior sequences based on these patterns and explores the modeling paradigms for each category.

Static vs. Dynamic Behavior Modeling

In user behavior modeling (UBM) applications, the logged user data is usually organized as either structured static datasets (e.g., user-movie rating matrix) or unstructured sequences (e.g., the purchase history of customers)3. Traditional recommender systems are built using collaborative filtering or content-based filtering methods. These methods model the user-item interaction in a static way and only capture users’ general preferences4.

In contrast, Sequential Recommender Systems (SRSs) model the sequential dependencies among user-item interactions by treating them as a dynamic sequence. In other words, while conventional static methods treat behavior information as static data (like tabular data), SRSs model it as sequential data (like time-series data).

Static Representation Learning

In static user behavior modeling, latent user representations are learned from static data, such as the user-item interaction matrix or user-advertisement response matrix. These simplified methods do not utilize any temporal information.

- Shallow Learning Based Models: Traditional shallow learning models usually rely on statistical assumptions and learn low-dimensional linear or non-linear subspaces from high-dimensional data. Matrix factorization, an effective solution to collaborative filtering, is the most representative example of this approach. It works by decomposing a user response matrix into latent factor matrices. While effective, it suffers from the cold-start problem: new users or items have no recorded interactions from which to learn latent factors. Hence, more advanced factorization methods incorporate side information to learn latent factors. These include Bayesian Matrix Factorization (BMF), Cross-domain Triadic Factorization (CDTF), Factorization Machine (FM), and Contextual Collaborative Filtering (CCF). These algorithms are simple, effective, and easy to implement and deploy, but they have limited capability in modeling complex user behavior data.

- Deep Learning Based Models: Methods based on deep learning employ neural networks to extract latent information from input data. For user behavior modeling, these methods focus on learning latent user representations from the corresponding behavior data. Examples include the Restricted Boltzmann Machine (RBM) based Ordinal RBM, Deep Belief Nets (DBN), the auto-encoder based Deep Collaborative Filtering (DCF), which couples Probabilistic Matrix Factorization (PMF) with Marginalized Denoising Auto-Encoders (mDA), and the feed-forward neural network based Neural Collaborative Filtering (NCF) and Neural Factorization Machine (NFM). Compared with shallow learning, these methods are better at exploiting complex feature interactions, but they struggle with issues like explainability and learning effective embeddings for categorical features.
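As an illustration of the shallow approach, the sketch below factorizes a small user response matrix $R \approx PQ^\top$ with stochastic gradient descent on the observed entries only. It is plain matrix factorization, not any of the named extensions, and the latent dimension, learning rate, regularization strength, and epoch count are illustrative assumptions. A user with no observed entries would keep its random initial factors, which is the cold-start problem in miniature.

```python
import random


def train_mf(ratings, n_users, n_items, k=8, lr=0.05, reg=0.02, epochs=300, seed=0):
    """Factorize a sparse response matrix R ~ P Q^T using SGD.

    ratings: list of (user_index, item_index, value) observed entries.
    Returns P (n_users x k) and Q (n_items x k) as nested lists.
    """
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(ratings)
    for _ in range(epochs):
        rng.shuffle(data)
        for u, i, r in data:
            err = r - predict(P, Q, u, i)
            for f in range(k):
                pu, qi = P[u][f], Q[i][f]
                # Gradient step on squared error with L2 regularization.
                P[u][f] += lr * (err * qi - reg * pu)
                Q[i][f] += lr * (err * pu - reg * qi)
    return P, Q


def predict(P, Q, u, i):
    """Predicted response: dot product of user and item latent factors."""
    return sum(p * q for p, q in zip(P[u], Q[i]))


ratings = [(0, 0, 5.0), (0, 1, 3.0), (1, 0, 4.0), (1, 2, 1.0), (2, 1, 4.0), (2, 2, 5.0)]
P, Q = train_mf(ratings, n_users=3, n_items=3)
print(round(predict(P, Q, 0, 2), 2))  # score for the unobserved (user 0, item 2) pair
```

The prediction for an unobserved pair is what a recommender would rank on; its exact value depends on the random seed and hyperparameters.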

Dynamic Representation Learning

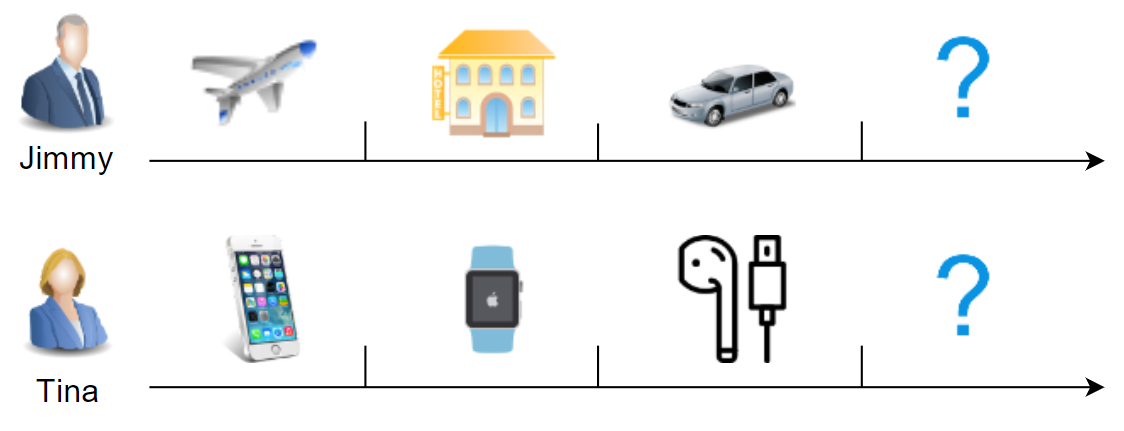

It is important to note that user behavior and preferences are dynamic and may change over time. Conventional systems like content-based or collaborative filtering cannot capture these sequential dependencies; they assume that all user-item interactions in the historical sequence are equally important1. In real-world scenarios, however, a user’s next action depends both on long-term static preferences and on current intent, which may be shaped by a small set of recent interactions.

Unlike static methods, dynamic representation learning methods, such as Sequential Recommender Systems (SRSs), take the evolving nature of user behavior sequences into account. SRSs learn how user preferences and item popularity evolve over time by modeling the sequential dependencies among user-item interactions. This yields a more accurate characterization of user intent and item consumption trends, and in turn more accurate and dynamic recommendations4.

By treating the user-item interactions as a dynamic sequence, SRSs can capture the current and recent preferences of the user more effectively and flexibly through temporal factors. Session-based (or session-aware) recommendations are a subdomain of sequential (or sequence-aware) recommendations. SRSs can also simultaneously model users’ short-term interest in a session as well as the correlation of behavior patterns across different sessions1.

The rest of the article focuses on dynamic user behavior modeling. Traditionally, non-deep learning methods such as Frequent Pattern Mining (Association Rules), item- and session-based KNN, Markov Chains, Dynamic Matrix Factorization, and Tensor Factorization have been used. However, deep learning based methods have generally proven more robust and accurate, so this article mainly discusses recent deep learning based approaches.
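To contrast sequential modeling with the static methods above, here is a minimal first-order Markov chain, one of the traditional non-deep-learning methods just listed. It estimates $P(\text{next item} \mid \text{last item})$ from transition counts; the function names and toy sessions are hypothetical. Because it conditions only on the last item, it cannot capture higher-order dependencies spanning several earlier interactions.

```python
from collections import Counter, defaultdict


def fit_markov_chain(sequences):
    """Count first-order transitions (item_t -> item_{t+1}) across behavior sequences."""
    transitions = defaultdict(Counter)
    for seq in sequences:
        for prev_item, next_item in zip(seq, seq[1:]):
            transitions[prev_item][next_item] += 1
    return transitions


def recommend_next(transitions, last_item, n=3):
    """Return up to n (item, probability) pairs ranked by estimated P(next | last_item)."""
    counts = transitions.get(last_item)  # .get does not insert a default entry
    if not counts:
        return []  # no observed transitions from this item
    total = sum(counts.values())
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(item, c / total) for item, c in ranked[:n]]


sessions = [["phone", "case", "charger"], ["phone", "case"], ["phone", "charger"], ["laptop", "mouse"]]
model = fit_markov_chain(sessions)
print(recommend_next(model, "phone"))  # 'case' (2 of 3 transitions) ranks above 'charger'
```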

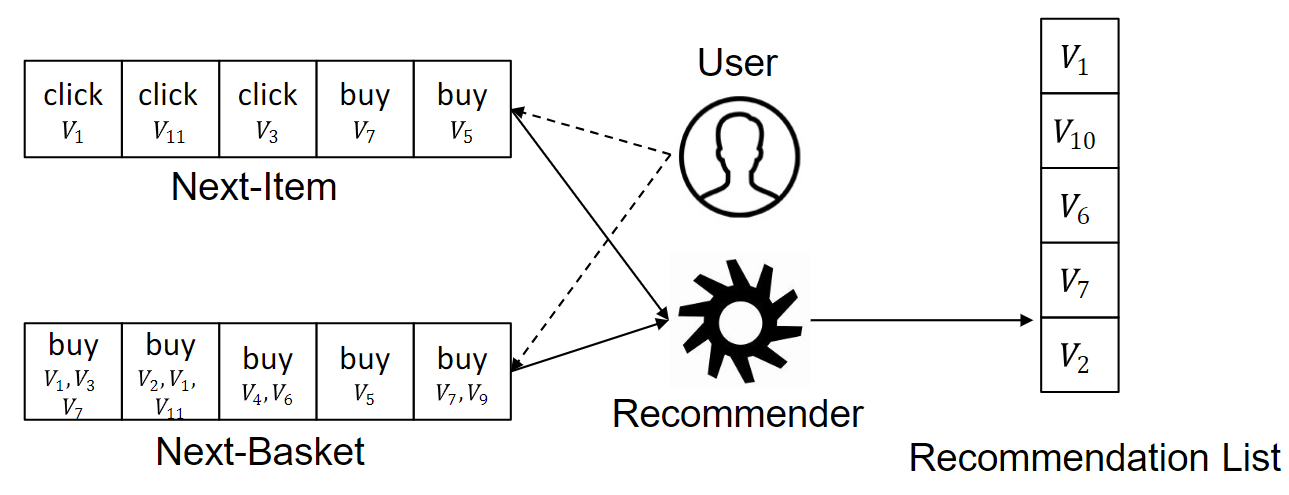

Next, we define two representative SRS tasks that take behavior trajectories as input:

- In the next-item recommendation, a behavior contains only one object or item, such as a product, a song, a movie, or a location.

- In the next-basket recommendation, a behavior contains more than one object.

Despite the difference in input, both tasks share the same goal: predicting the next item(s) for the user. The most popular form of output is a top-N ranked item list. The ranking can be determined by probabilities, absolute values, or relative rankings. Assuming $V_i$ refers to the $i$-th item, the following figure depicts the two tasks1.

Formally, we define the sequential recommendation task as generating a personalized item list given a user behavior sequence as input, i.e. $(p_1, p_2, p_3, \dots, p_I) = f(a_1, a_2, a_3, \dots, a_t, u)$, where $\{a_1, a_2, a_3, \dots, a_t\}$ is the input behavior sequence, $u$ refers to the corresponding user, $I$ is the number of candidate items, and $p_i$ is the probability that item $i$ will be liked by user $u$ at time $t+1$. Our goal is to learn a complex function $f$ that accurately predicts the probability $p_i$.
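This formulation can be illustrated with a deliberately simple, hypothetical choice of $f$: represent the sequence as the mean of its item embeddings, add a user vector for $u$, score every candidate by a dot product, and apply a softmax so the scores become probabilities $p_1, \dots, p_I$. The hand-set embeddings and function names are assumptions for illustration; a real SRS would learn $f$, for example with the RNN, CNN, or attention models discussed later.

```python
import math


def sequential_scores(history, user_vec, item_emb):
    """Toy f(a_1, ..., a_t, u): return p_i for every candidate item i."""
    if not history:
        raise ValueError("history must contain at least one behavior")
    dim = len(user_vec)
    # Sequence representation: mean of the embeddings of the items in the history.
    seq_vec = [sum(item_emb[a][d] for a in history) / len(history) for d in range(dim)]
    query = [s + u for s, u in zip(seq_vec, user_vec)]
    logits = {i: sum(q * e for q, e in zip(query, vec)) for i, vec in item_emb.items()}
    # Softmax (shifted by the max for numerical stability) gives probabilities summing to 1.
    m = max(logits.values())
    exp = {i: math.exp(z - m) for i, z in logits.items()}
    total = sum(exp.values())
    return {i: v / total for i, v in exp.items()}


def top_n(probs, n, exclude=()):
    """Top-N ranked item list, skipping items in `exclude` (e.g. already consumed)."""
    ranked = sorted((i for i in probs if i not in exclude), key=lambda i: probs[i], reverse=True)
    return ranked[:n]


item_emb = {"v1": [1.0, 0.0], "v2": [0.9, 0.1], "v3": [0.0, 1.0], "v4": [0.1, 0.9]}
probs = sequential_scores(["v1"], user_vec=[0.2, 0.0], item_emb=item_emb)
print(top_n(probs, n=2, exclude={"v1"}))  # ['v2', 'v4']
```

Excluding already-consumed items is a common design choice for next-item recommendation, but whether repeat consumption should be allowed depends on the application.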

Common Challenges with Modeling User Behavior

In real-world scenarios, the user behaviors are often diverse and complex. In this section, we take a look at some of the common challenges with modeling user behavior based on different data characteristics.

- Long Sequences: Long behavior sequences are more likely to contain complex dependencies spanning multiple interactions. Such higher-order sequential dependencies are hard to capture with conventional models like Markov Chains and Factorization Machines. Deep learning models like RNNs can handle moderately long sequences, but they also suffer from overly strong order assumptions4.

- Flexible Order: In real-world data, not all adjacent interactions in a behavior sequence are sequentially dependent. For example, in a shopping sequence of {milk, butter, flour}, the order of ‘milk’ and ‘butter’ doesn’t matter, but the purchase of both items leads to a higher probability of buying ‘flour’ next. Models like CNNs with skip-connections have been used to avoid assuming strict order over user-item interactions.

- Noisy Items: Many behavior sequences contain noisy or irrelevant interactions, which degrade the quality of next-item prediction. For example, in a shopping sequence of {bacon, rose, eggs, bread}, the item ‘rose’ may be noise: unlike ‘eggs’ and ‘bread’, it is unlikely to be a good predictor of a next item like ‘milk’. Attention models and retrieval-based models alleviate this problem by selectively using information from behavior sequences.

- Heterogeneous Relations: A behavior sequence may contain different types of relations that convey different information and should be modeled differently. This problem is relatively underexplored, and mixture models are the main solution proposed so far.

- Hierarchical Relations: There could also be hierarchical dependencies in user-item interactions or their sub-sequences. Specialized models like hierarchical RNNs, hierarchical attention networks, hierarchical embedding models, and session-based recommenders have been devised to learn hierarchical dependencies.
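The noisy-items example can be illustrated with target attention: each past behavior is weighted by its similarity to the candidate item, so an unrelated purchase contributes little to the pooled user representation. This is a simplified dot-product-plus-softmax scheme in the spirit of attention-based models such as DIN, not DIN’s actual activation unit, and the two-dimensional embeddings (a “breakfast food” axis and a “flower” axis) are invented for illustration.

```python
import math


def target_attention(history, target, emb):
    """Weight past items by relevance to the target item and pool them.

    Returns (list of (item, weight) pairs, pooled user vector).
    """
    scores = [sum(h * t for h, t in zip(emb[item], emb[target])) for item in history]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # softmax, shifted for stability
    z = sum(exps)
    weights = [e / z for e in exps]
    dim = len(emb[target])
    pooled = [sum(w * emb[item][d] for w, item in zip(weights, history)) for d in range(dim)]
    return list(zip(history, weights)), pooled


# Hypothetical 2-D embeddings: [breakfast-food-ness, flower-ness].
emb = {
    "bacon": [2.0, 0.0], "rose": [0.0, 2.0], "eggs": [2.0, 0.1],
    "bread": [1.8, 0.0], "milk": [2.0, 0.0],
}
weights, user_vec = target_attention(["bacon", "rose", "eggs", "bread"], "milk", emb)
for item, w in weights:
    print(f"{item}: {w:.3f}")  # 'rose' receives by far the smallest weight
```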

Types of Behavior Sequences

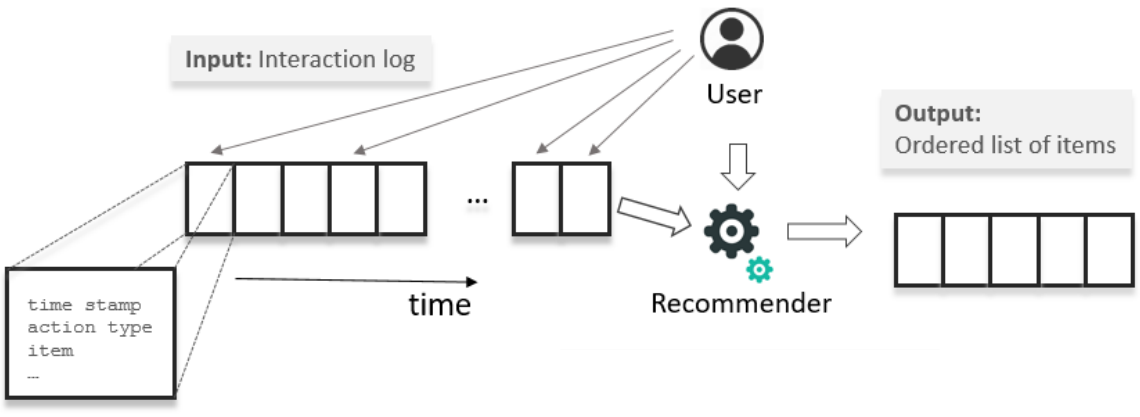

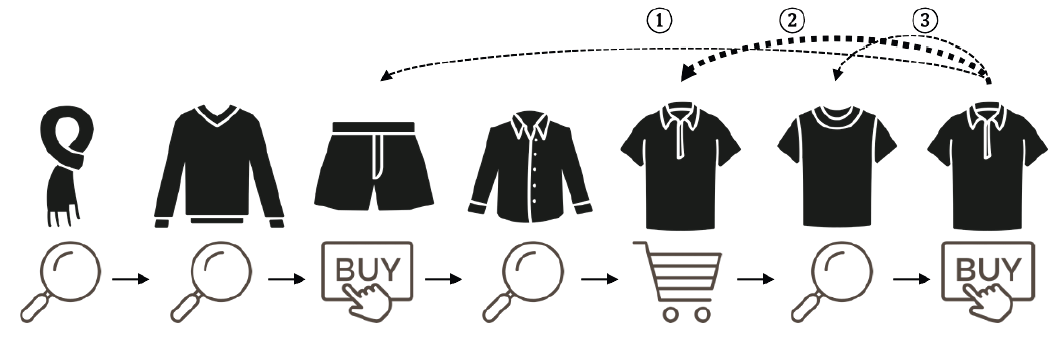

Behavior sequences of a predefined size are fetched from users’ historical information, e.g., the previous 7 days of logs. A behavior sequence consists of item IDs, contextual information, and the corresponding item features (if any). As shown in the next figure, behavior features are usually sorted in temporal order5.
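A minimal sketch of this preprocessing step follows, assuming a hypothetical log schema of `(user_id, item_id, timestamp_in_seconds)` tuples and keeping only item IDs (contextual information and item features are omitted). The window length, maximum sequence length, and left-padding with a `<pad>` token are illustrative choices; left-padding keeps the most recent behavior in the last position, which is convenient for models that predict from the final position.

```python
DAY = 24 * 3600  # seconds per day


def build_behavior_sequence(logs, user_id, now, window_days=7, max_len=5, pad_id="<pad>"):
    """Return a fixed-length, time-ordered item sequence for one user.

    logs: iterable of (user_id, item_id, timestamp_seconds) tuples.
    Keeps events within the last `window_days` before `now`, sorts them oldest
    first, keeps the `max_len` most recent, and left-pads to exactly `max_len`.
    """
    start = now - window_days * DAY
    events = sorted((ts, item) for uid, item, ts in logs if uid == user_id and start <= ts <= now)
    items = [item for _, item in events][-max_len:]
    return [pad_id] * (max_len - len(items)) + items


logs = [
    ("u1", "shoes", 1 * DAY), ("u1", "socks", 9 * DAY), ("u2", "hat", 9 * DAY),
    ("u1", "laces", 8 * DAY), ("u1", "insoles", 10 * DAY),
]
print(build_behavior_sequence(logs, "u1", now=10 * DAY))
# ['<pad>', '<pad>', 'laces', 'socks', 'insoles']
```

The "shoes" event falls outside the 7-day window and the "hat" event belongs to another user, so both are dropped.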

Implicit user behavior modeling is a broad topic and can be interpreted through various dimensions. In this section, we will categorize the existing algorithms in terms of three types of behavior sequences as per the taxonomy suggested by Fang et al1.

Experience-based Behavior Sequence

In an experience-based behavior sequence, a user may interact with the same object (e.g., item $v_i$ in the next figure) multiple times through different behavior types. Here $\{a_1, a_2, \dots, a_n\}$ is the behavior sequence, with each behavior $a_i$ represented by a tuple of behavior type and behavior object.

For example, a user may search for an item, click on the item in the search results, then view the details of the item. The user may also share the item with their contacts, add the item to the cart, and purchase the item. All these behavior types and their order may indicate different user intentions. A sequence with only click and view behavior may indicate a low degree of user interest. Similarly, sharing the item before a purchase might imply a desire to obtain the item, whereas sharing an item after the purchase could indicate satisfaction with the item. Here, a recommender system (sometimes also called a multi-behavior recommendation model) is expected to capture the user’s underlying intentions and predict the next behavior type given an item.

Modeling Paradigms

Conventional approaches to modeling experience-based behavior sequences include Collective Matrix Factorization (CMF), Behavioral Factorization (used by the Google+ topic recommender6), Multi-feedback Bayesian Personalized Ranking (MF-BPR), Bayesian Personalized Ranking for Heterogeneous Implicit Feedback (BPRH), and Element-wise Alternating Least Squares (eALS). These approaches leverage unary user feedback drawn from different channels.

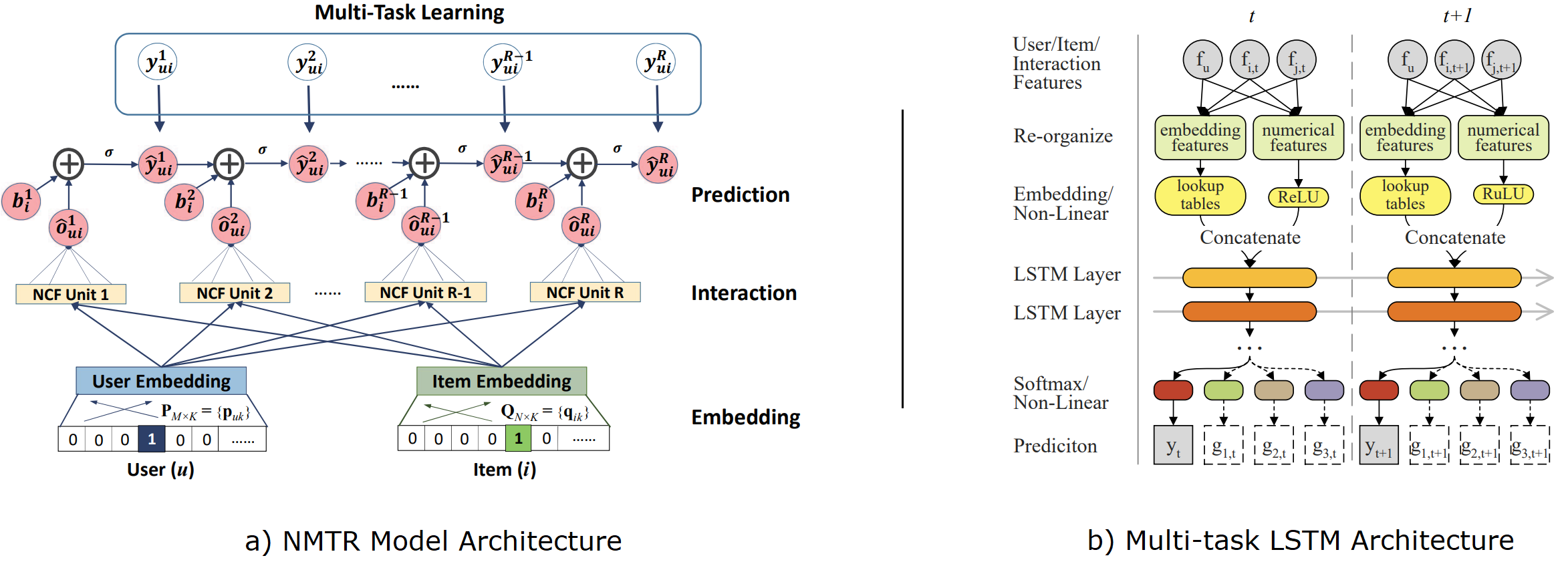

Deep learning techniques have also been explored for multi-behavior recommendation. For example, Neural Multi-task Recommendation7 (NMTR) performs a joint optimization based on the multi-task learning (MTL) framework, where optimization on each behavior is treated as a task. NMTR combines the advances in Neural Collaborative Filtering (NCF) and MTL in a design where a user and an item have shared embeddings across multiple types of behaviors. Similarly, Xia et al.8 proposed an MTL model with LSTM to explicitly model users’ purchase decision process by predicting the stage and decision of a user at a specific time.

Transaction-based Behavior Sequence

A transaction-based behavior sequence consists of different behavior objects that a user interacts with through the same behavior type. In practice, buying is the most common behavior type of concern for recommenders in the e-commerce domain. The goal of the recommender model here is therefore to recommend the next item that a user will buy given the user’s transaction history. This setup represents a conventional user behavior modeling task9.

Modeling Paradigms

For transaction-based behavior modeling, a recommender models the sequential dependencies between different items as well as user preferences. A significant amount of deep learning research falls into this category.

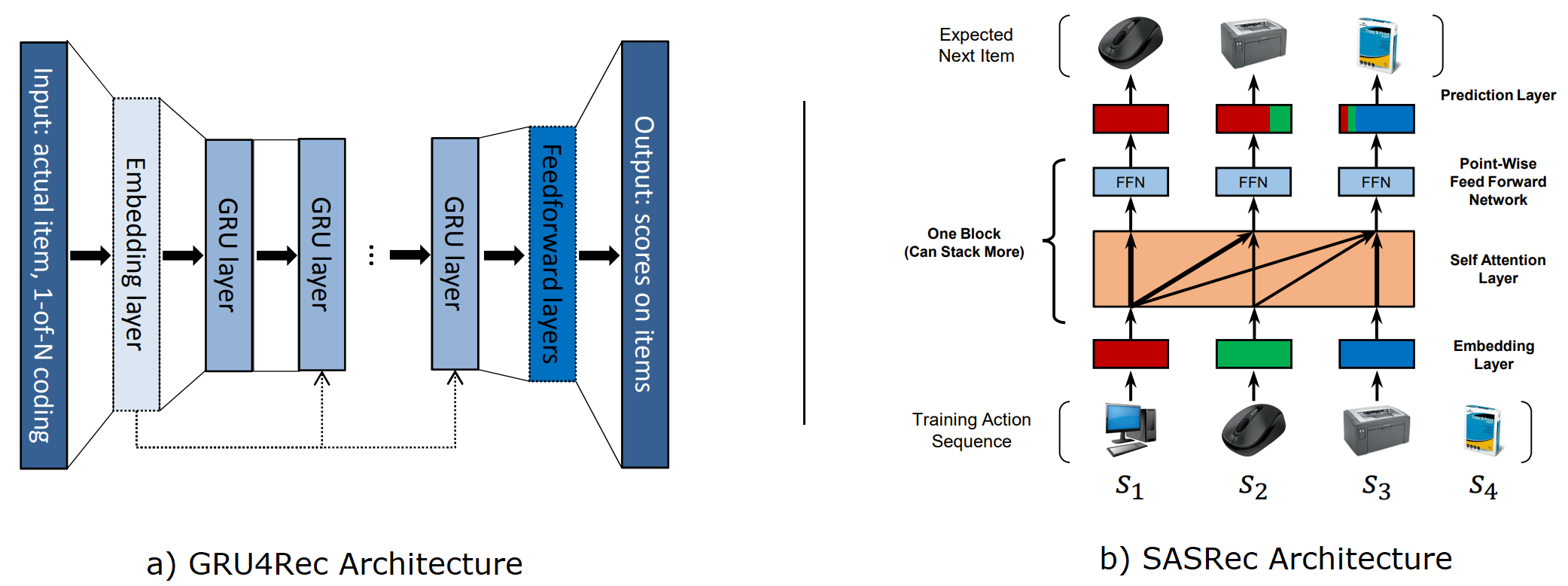

- RNN-based models: RNNs are a natural fit for capturing dependencies along a sequence. GRU4Rec10 is one of the earliest models to apply RNNs to behavior sequences containing the items from a session. Several follow-up studies extended GRU4Rec, for example with data augmentation techniques or improved ranking loss functions.

- CNN-based models: CNN-based models have been used to partially alleviate some of the shortcomings of RNN-based models, such as expensive computing costs and the inability to model long sequences. For example, 3D-CNNs have been applied in the e-commerce domain to predict the items that users might want to add to their shopping carts. Similarly, CNN-based Caser, CNN-Rec, and NextItNet models have been used to capture both short-term and long-term item dependencies.

- Attention-based models: Attention-based models have been successfully applied to identify more relevant items to users given their historical behavior. Neural Attentive Recommendation Machine (NARM), Deep Interest Network (DIN), Deep Interest Evolution Network (DIEN) and Attention-based Transaction Embedding Model (ATEM) are some of the representative examples of vanilla attention models in this category. Self-attention models for transaction-based behavior sequences have also attracted a lot of research work. Popular examples in this category include Sequential Deep Matching (SDM), Self-Attention based Sequential Recommendation Model (SASRec)11, Time interval aware SASRec (TiSASRec), Self-attention network for session-based recommendation (SANSR), Deep Session Interest Network (DSIN), Behavior Sequence Transformer (BST) and BERT4Rec.
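The core operation behind self-attention models such as SASRec is causal (masked) scaled dot-product self-attention: the representation at position $t$ may only attend to behaviors at positions up to $t$, so it can be used to predict the item at $t+1$ without peeking at the future. The sketch below shows a single head with identity query, key, and value projections. That is a simplification for illustration; SASRec learns these projections, adds positional embeddings, and stacks several blocks.

```python
import math


def causal_self_attention(x):
    """Single-head scaled dot-product self-attention with a causal mask.

    x: list of T embedding vectors, oldest behavior first. Query, key and value
    projections are identity maps here (a simplification for illustration).
    """
    d = len(x[0])
    out = []
    for t in range(len(x)):
        # Causal mask: position t only scores positions 0..t.
        scores = [sum(a * b for a, b in zip(x[t], x[j])) / math.sqrt(d) for j in range(t + 1)]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(w[j] / z * x[j][k] for j in range(t + 1)) for k in range(d)])
    return out


x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy item embeddings, oldest first
out = causal_self_attention(x)
print(out[0])  # [1.0, 0.0]: the first position can only attend to itself
```

Changing a later behavior leaves the outputs at earlier positions untouched, which is exactly what the causal mask guarantees.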

Interaction-based Behavior Sequence

An interaction-based behavior sequence represents a mixture of experience-based and transaction-based behavior sequences, i.e. it consists of different behavior objects and different behavior types simultaneously.

This setup is much closer to real-world scenarios. The recommender model here is expected to learn different user interests expressed by different behavior types and preferences implied by different items. The goal of the model is to predict the next item that the user will interact with.
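The three sequence types differ only in how many distinct behavior types and behavior objects they contain, which the following sketch makes explicit. It represents each behavior as a `(behavior_type, behavior_object)` tuple, as in the experience-based definition above. The function name and the `"trivial"` label for a sequence with at most one type and one object are hypothetical additions, not part of the Fang et al. taxonomy.

```python
def sequence_kind(behaviors):
    """Classify a behavior sequence by the number of distinct types and objects.

    behaviors: list of (behavior_type, behavior_object) tuples.
    """
    types = {t for t, _ in behaviors}
    objects = {o for _, o in behaviors}
    if len(types) > 1 and len(objects) == 1:
        return "experience-based"   # same object, different behavior types
    if len(types) == 1 and len(objects) > 1:
        return "transaction-based"  # same behavior type, different objects
    if len(types) > 1 and len(objects) > 1:
        return "interaction-based"  # different types and different objects
    return "trivial"                # hypothetical label: at most one type and one object


print(sequence_kind([("search", "v1"), ("click", "v1"), ("buy", "v1")]))  # experience-based
print(sequence_kind([("buy", "v1"), ("buy", "v2"), ("buy", "v3")]))      # transaction-based
print(sequence_kind([("click", "v1"), ("cart", "v2"), ("buy", "v1")]))   # interaction-based
```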

Modeling Paradigms

Recommender systems for interaction-based sequences are expected to capture the sequential dependencies among different behaviors and among different items, as well as the relations between behaviors and items. These methods aim to explicitly model subtle differences between behavior types. This matters because, in e-commerce applications for example, purchase behavior is a stronger indicator of interest than click behavior. Similarly, positive and negative ratings convey opposite semantics in product reviews. Existing research can be classified into the following categories based on how cross-behavior relations are fused with intra-behavior relations9.

- Late-fusion Models: Late-fusion models use a two-step process to model cross-behavior and intra-behavior relations. Representative examples include Neural Multi-task Recommendation (NMTR), Deep Multifaceted Transformers (DMT), Zoo of ranking models for multiple scenarios (ZEUS), and Graph Heterogeneous Collaborative Filtering (GHCF). A common shortcoming of these methods is that they neglect item-level cross-behavior modeling.

- Early-fusion Models: Early-fusion models learn cross-behavior and intra-behavior relations jointly in a hybrid manner. Examples include Recommendation framework from micro behavior perspective (RIB), Multi-Behavior Sequential Transformer Recommender (MB-STR), Parallel Information Sharing Network ($\pi$-Net), and graph learning methods like Multi-behavior Graph Convolutional Network (MBGCN) and Multi-behavior Graph Meta Network (MB-GMN). These models tend to perform well because they explore cross-behavior and intra-behavior dependencies at the item level. However, they also tend to be computationally expensive due to their model complexity.

Multi-Behavior Predictions

A unique challenge in modeling interaction-based behavior sequences is predicting multiple types of behaviors with the same model. Joint prediction is hard because the label distributions of different behaviors may not lie in the same space and may even be mutually exclusive9. Methods like GHCF, Deep Intent Prediction Network (DIPN), and Domain-Aware GCN (DACGN) construct separate prediction modules (or MTL “heads”) for different behavior types. However, this strategy can perform sub-optimally because it neglects the relations between tasks. Approaches like Multi-gate Mixture-of-Experts (MMoE) and Progressive Layered Extraction (PLE) alleviate the issue by promoting task relevance and suppressing task conflicts. Examples of such solutions include DMT, ZEUS, and MB-STR12.
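The following sketch shows the core idea of MMoE: all tasks share a pool of experts, but each task (here, click and purchase prediction) has its own softmax gate that decides how to mix the experts, and its own tower that produces the task’s output. Everything here is an illustrative assumption: experts, gates, and towers are single linear maps with hand-set weights, and no training is shown. PLE, which additionally separates task-specific experts from shared ones, is not shown.

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def mmoe_forward(x, experts, gates, towers):
    """Multi-gate Mixture-of-Experts forward pass with linear experts.

    experts: list of E weight matrices (shared by all tasks).
    gates:   task -> (E x len(x)) matrix; a softmax over its output mixes the experts.
    towers:  task -> weight vector mapping the mixed representation to a logit.
    Returns task -> probability (sigmoid of the tower logit).
    """
    expert_outs = [matvec(W, x) for W in experts]
    preds = {}
    for task, G in gates.items():
        g = softmax(matvec(G, x))  # task-specific mixing weights over shared experts
        mixed = [sum(g[e] * expert_outs[e][k] for e in range(len(experts)))
                 for k in range(len(expert_outs[0]))]
        logit = sum(w * h for w, h in zip(towers[task], mixed))
        preds[task] = 1.0 / (1.0 + math.exp(-logit))
    return preds


x = [1.0, 0.5]  # hypothetical shared user-item feature vector
experts = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]]]
gates = {"click": [[1.0, 0.0], [0.0, 1.0]], "buy": [[0.0, 1.0], [1.0, 0.0]]}
towers = {"click": [0.5, 0.5], "buy": [1.0, -1.0]}
print(mmoe_forward(x, experts, gates, towers))
```

Because each gate sees the same input but has its own weights, the two tasks draw on the shared experts in different proportions.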

Advanced Topics

Modeling Longer User Behavior Sequences

Online platforms such as e-commerce websites often accumulate massive amounts of user behavior data. It is therefore valuable for many applications to mine patterns from more distant user history by feeding the model a very long history of user-item interactions. Such sequences often contain thousands of interactions or more, so modeling them can become prohibitively expensive in computation and latency. Longer sequences also tend to contain more noise. The solutions proposed to mitigate these challenges fall into the following two categories.

- Memory-Augmented Models: Inspired by memory-augmented networks in NLP, several recommender models employ an external memory to store user representations, which is read and updated by a tailored neural network. For example, Neural Memory Recommender Networks (NMRN) is a GAN-based recommender that uses external memory to capture and store both long-term interests and short-term dynamic interests in a streaming data scenario. Recommender Systems with External User Memory Networks (RUM) uses a FIFO memory to explicitly store user history and applies an attention mechanism over the memory matrix to compute next-item recommendations. Other examples of such models include Knowledge-enhanced Sequential Recommender (KSR), Hierarchical Periodic Memory Networks (HPMN)13, Memory-Augmented Transformer Networks (MATN), and User Interest Center (UIC).

- Retrieval-based Models: Retrieval-based models provide an alternative to memory-augmented models that is more efficient and easier to scale and deploy. Instead of using the entire long behavior sequence, retrieval-based techniques retrieve only the most relevant behaviors. This alleviates both the time complexity of handling long sequences and the noisy signals they contain. For example, User Behavior Retrieval for CTR Prediction (UBR4CTR14) uses search engine techniques to retrieve the top-k relevant historical behaviors: it generates a query from the target item using a parametric process and then uses that query to perform retrieval. Other representative models in this category include the Search-based Interest Model (SIM), End-to-End Target Attention (ETA), Sampling-based Deep Interest Modeling (SDIM), and Adversarial Filtering Model (ADFM).
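Two minimal sketches contrast these approaches. The first is a fixed-size FIFO memory with an attention-based read, loosely in the spirit of RUM’s FIFO memory. The second is a category-based “hard search” that keeps only past behaviors in the same category as the target item, in the spirit of the hard-search variant described for SIM (UBR4CTR instead issues a learned query to a search engine, which is not shown). Slot counts, the dot-product scoring, the `(timestamp, item_id, category)` record layout, and all names are assumptions for illustration.

```python
import math
from collections import deque, defaultdict


class FIFOUserMemory:
    """Memory-augmented sketch: a fixed-size FIFO store of item embeddings."""

    def __init__(self, num_slots):
        self.slots = deque(maxlen=num_slots)  # writing past capacity evicts the oldest slot

    def write(self, item_vec):
        self.slots.append(list(item_vec))

    def read(self, query_vec):
        """Attention-weighted sum of memory slots for a query (e.g. a candidate item)."""
        if not self.slots:
            return [0.0] * len(query_vec)
        scores = [sum(q * s for q, s in zip(query_vec, slot)) for slot in self.slots]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        return [sum(w / z * slot[k] for w, slot in zip(weights, self.slots))
                for k in range(len(query_vec))]


def build_category_index(behaviors):
    """Retrieval sketch: index (timestamp, item_id, category) behaviors by category."""
    index = defaultdict(list)
    for ts, item, category in sorted(behaviors):  # oldest first
        index[category].append((ts, item))
    return index


def hard_search(index, target_category, k):
    """Return the k most recent behaviors sharing the target item's category."""
    hits = index.get(target_category, [])
    return hits[-k:] if k > 0 else []


memory = FIFOUserMemory(num_slots=2)
for vec in ([1.0, 0.0], [0.0, 1.0], [1.0, 1.0]):
    memory.write(vec)                  # the first vector is evicted
print(memory.read([0.0, 0.0]))         # [0.5, 1.0]: uniform attention over the two slots

history = [(1, "runner", "shoes"), (2, "tee", "shirts"), (3, "boot", "shoes"),
           (4, "cap", "hats"), (5, "sandal", "shoes")]
index = build_category_index(history)
print(hard_search(index, "shoes", k=2))  # [(3, 'boot'), (5, 'sandal')]
```

The memory keeps its cost fixed by forgetting old behaviors, while the retrieval path keeps the full history but only feeds the few relevant records to the downstream model.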

User Behavior Retrieval for CTR Prediction (UBR4CTR) Framework

Modeling User Behavior with Side Information

User behavior sequences often contain rich side information with each behavior record. It could include information about the items, such as category, images, text descriptions, reviews, information related to behavior, such as dwell time, or any other contextual information. We can categorize the modeling approaches based on the following types of side information.

- Time information: In methods like SASRec and BERT4Rec, time information is used to sort the behavior records, which determines the position encodings applied before sequence modeling. Time Slice Self-Attention (TiSSA) and TiSASRec use the time interval between item pairs to compute attention weights. Several other approaches use dwell time to measure the importance of behavior objects.

- Item Attributes: Models like Feature-level Deeper Self-Attention Network (FDSA) and Transformer with Attention2D layer (Trans2D) propose approaches to jointly model item IDs and item attributes like category, brand, price, and description text for sequential recommendations.

- Multi-modal Information: Using multi-modal side information is a bit more complex. For example, the Parallel-RNN (p-RNN) model first extracts image features from video thumbnails and text features from product descriptions. Then, it applies NLP and Computer Vision (CV) approaches to learn multi-modal feature representations separately. Similarly, Sequential Multi-modal Information Transfer Network (SEMI) obtains video and text representations through pre-trained NLP and CV models.
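For the time-interval approach, the sketch below computes a matrix of relative time intervals between every pair of behaviors, loosely following the personalized scheme described for TiSASRec: intervals are divided by the user’s smallest non-zero gap, floored to integers, and clipped at a maximum $k$, so each entry can index one of $k+1$ learned interval embeddings. The function name and the fallback to a unit of 1 when all timestamps coincide are assumptions.

```python
def interval_matrix(timestamps, k):
    """Pairwise relative time intervals, personalized and clipped.

    timestamps: the user's behavior timestamps (any consistent unit), oldest first.
    Each |t_i - t_j| is divided by the user's smallest non-zero gap, floored, and
    clipped at k, so entries are integers in 0..k usable as embedding indices.
    """
    n = len(timestamps)
    gaps = [abs(a - b) for a in timestamps for b in timestamps if a != b]
    unit = min(gaps) if gaps else 1  # assumption: fall back to 1 if all timestamps are equal
    return [[min(int(abs(timestamps[i] - timestamps[j]) // unit), k) for j in range(n)]
            for i in range(n)]


print(interval_matrix([0, 10, 30, 100], k=5))
# [[0, 1, 3, 5], [1, 0, 2, 5], [3, 2, 0, 5], [5, 5, 5, 0]]
```

Clipping at $k$ reflects the intuition that, beyond some gap, the exact distance between two behaviors matters little.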

Side Information Utilization

Models like Parallel-RNN (p-RNN) directly concatenate the learned vectors for item IDs and side information. However, fusing side information using simple operators like addition or concatenation may negatively impact the original item ID representations due to the heterogeneous nature of the side information. To address this, Non-invasive self-attention with BERT (NOVA-BERT) and Decoupled Side Information Fusion for Sequential Recommendation (DIF-SR) utilize side information to learn better attention distribution. Several self-supervised learning frameworks have also been proposed for effectively utilizing the side information15.
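The difference between invasive fusion and the non-invasive idea behind NOVA-BERT can be sketched in a few lines. With invasive fusion, side-information embeddings are added into the item representation that self-attention mixes. With non-invasive fusion, the side information only enters the queries and keys (and hence the attention weights), while the values remain pure item-ID embeddings. This is a simplified single-head illustration with additive fusion and identity projections, not the exact NOVA-BERT or DIF-SR architecture, and all names are hypothetical.

```python
import math


def attention(queries, keys, values):
    """Single-head scaled dot-product attention with identity projections."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi / z * v[c] for wi, v in zip(w, values)) for c in range(len(values[0]))])
    return out


def fuse(id_emb, side_emb):
    """Additive fusion of item-ID and side-information embeddings."""
    return [[a + b for a, b in zip(i, s)] for i, s in zip(id_emb, side_emb)]


def invasive_layer(id_emb, side_emb):
    fused = fuse(id_emb, side_emb)
    return attention(fused, fused, fused)   # side info leaks into the values


def non_invasive_layer(id_emb, side_emb):
    fused = fuse(id_emb, side_emb)
    return attention(fused, fused, id_emb)  # side info only shapes the attention weights


id_emb = [[1.0, 0.0], [0.0, 1.0]]
side_emb = [[5.0, 5.0], [5.0, 5.0]]  # e.g. both items share a large "category" vector
print(invasive_layer(id_emb, side_emb))
print(non_invasive_layer(id_emb, side_emb))
```

In the invasive output the shared side vector dominates both rows, whereas the non-invasive output stays a mixture of the original ID embeddings.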

Summary

Recommender systems have become an integral part of a wide range of online services, such as news, entertainment, and e-commerce. These recommenders help customize and adapt services to users’ specific needs. To achieve this personalization, deep learning models learn user intentions and preferences from behavior logs. Modeling user behavior from past user-item interactions has also been extended to other domains, such as CTR prediction and targeted advertising. This article provided an overview of user behavior taxonomy, modeling strategies, representative works, open problems, and recent advancements.

References

- Fang, H., Zhang, D., Shu, Y., & Guo, G. (2019). Deep Learning for Sequential Recommendation: Algorithms, Influential Factors, and Evaluations. ArXiv. /abs/1905.01997

- Zhang, W., Qin, J., Guo, W., Tang, R., & He, X. (2021). Deep Learning for Click-Through Rate Estimation. ArXiv. /abs/2104.10584

- Li, S., & Zhao, H. (2020). A Survey on Representation Learning for User Modeling. International Joint Conference on Artificial Intelligence.

- Wang, S., Hu, L., Wang, Y., Cao, L., Sheng, Q. Z., & Orgun, M. (2019). Sequential Recommender Systems: Challenges, Progress and Prospects. ArXiv. https://doi.org/10.24963/ijcai.2019/883

- Quadrana, M., Cremonesi, P., & Jannach, D. (2018). Sequence-Aware Recommender Systems. ArXiv. /abs/1802.08452

- Zhao, Z., Cheng, Z., Hong, L., & Chi, E.H. (2015). Improving User Topic Interest Profiles by Behavior Factorization. Proceedings of the 24th International Conference on World Wide Web.

- Gao, C., He, X., Gan, D., Chen, X., Feng, F., Li, Y., Chua, T., Yao, L., Song, Y., & Jin, D. (2018). Learning to Recommend with Multiple Cascading Behaviors. ArXiv. https://doi.org/10.1109/TKDE.2019.2958808

- Xia, Q., Jiang, P., Sun, F., Zhang, Y., Wang, X., & Sui, Z. (2018). Modeling Consumer Buying Decision for Recommendation Based on Multi-Task Deep Learning. Proceedings of the 27th ACM International Conference on Information and Knowledge Management.

- He, Z., Liu, W., Guo, W., Qin, J., Zhang, Y., Hu, Y., & Tang, R. (2023). A Survey on User Behavior Modeling in Recommender Systems. ArXiv. /abs/2302.11087

- Hidasi, B., Karatzoglou, A., Baltrunas, L., & Tikk, D. (2015). Session-based Recommendations with Recurrent Neural Networks. ArXiv. /abs/1511.06939

- Kang, W., & McAuley, J. (2018). Self-Attentive Sequential Recommendation. ArXiv. /abs/1808.09781

- Yuan, E., Guo, W., He, Z., Guo, H., Liu, C., & Tang, R. (2022). Multi-Behavior Sequential Transformer Recommender. pp. 1642-1652. https://doi.org/10.1145/3477495.3532023

- Ren, K., Qin, J., Fang, Y., Zhang, W., Zheng, L., Bian, W., Zhou, G., Xu, J., Yu, Y., Zhu, X., & Gai, K. (2019). Lifelong Sequential Modeling with Personalized Memorization for User Response Prediction. ArXiv. https://doi.org/10.1145/3331184.3331230

- Qin, J., Zhang, W., Wu, X., Jin, J., Fang, Y., & Yu, Y. (2020). User Behavior Retrieval for Click-Through Rate Prediction. ArXiv. https://doi.org/10.1145/3397271.3401440

- Yoon, J.H., & Jang, B. (2023). Evolution of Deep Learning-Based Sequential Recommender Systems: From Current Trends to New Perspectives. IEEE Access, 11, 54265-54279.
